Purdue MA 26500 Fall 2023 Midterm I Solutions

This is the third study-notes post for the college linear algebra course. It reviews the Purdue MA 26500 Fall 2023 Midterm I, with solutions to all exam questions and concise explanations.

Introduction

The Purdue University Department of Mathematics offers MA 26500, an introductory-level linear algebra course, every semester. Science and engineering undergraduates who take this course gain a solid mathematical foundation for advanced studies in machine learning, computer graphics, control theory, and more.

Disclosure: This blog site is reader-supported. When you buy through the affiliate links below, as an Amazon Associate, I earn a tiny commission from qualifying purchases. Thank you.

MA 26500 textbook is Linear Algebra and its Applications (6th Edition) by David C. Lay, Steven R. Lay, and Judi J. McDonald. The authors have also published a student study guide for it, which is available for purchase on Amazon as well.

MA 26500 midterm I covers the topics in Sections 1.1 – 3.3 of the textbook. It is usually scheduled at the beginning of the seventh week. The exam format is a combination of multiple-choice questions and short-answer questions. Students are given one hour to finish answering the exam questions.

Here are a few extra reference links for Purdue MA 26500:

- Purdue Department of Mathematics Course Archive

- Purdue MA 26500 Spring 2024

- Purdue MA 26500 Exam Archive

Fall 2023 Midterm I Solutions

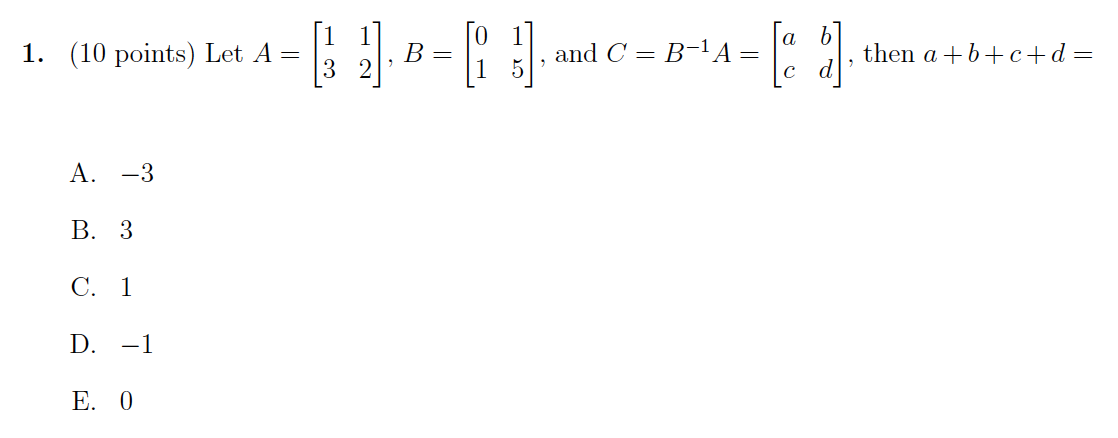

Problem 1 (10 points)

Problem 1 Solution

Because \(C=B^{-1}A\), left-multiplying both sides by \(B\) gives \(BC=BB^{-1}A=A\). So \[ \begin{bmatrix}0 & 1\\1 & 5\\\end{bmatrix} \begin{bmatrix}a & b\\c & d\\\end{bmatrix}= \begin{bmatrix}1 & 1\\3 & 2\\\end{bmatrix} \] Carrying out the matrix multiplication on the left-hand side gives \[ \begin{bmatrix}c &d\\a+5c &b+5d\\\end{bmatrix}= \begin{bmatrix}1 & 1\\3 & 2\\\end{bmatrix} \] The first row gives \(c=d=1\) directly. Substituting into the second row, \(a+5=3\) and \(b+5=2\), so \(a=-2\) and \(b=-3\). Hence \(a+b+c+d=-3\).
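To double-check the arithmetic, here is a minimal Python sketch (our own check, not part of the exam) that computes \(C=B^{-1}A\) directly with exact fractions from the standard `fractions` module, using the usual 2×2 inverse formula. The helper names `inverse_2x2` and `matmul` are our own.

```python
from fractions import Fraction


def inverse_2x2(M):
    """Invert [[p, q], [r, s]] as (1/det) * [[s, -q], [-r, p]]."""
    (p, q), (r, s) = M
    det = Fraction(p * s - q * r)
    if det == 0:
        raise ValueError("matrix is not invertible")
    return [[s / det, -q / det], [-r / det, p / det]]


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


B = [[0, 1], [1, 5]]
A = [[1, 1], [3, 2]]

C = matmul(inverse_2x2(B), A)                 # C = B^{-1} A
print([[int(x) for x in row] for row in C])   # [[-2, -3], [1, 1]]
print(sum(sum(row) for row in C))             # -3
```

Using `Fraction` keeps every entry exact, so the check cannot be thrown off by floating-point rounding.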

The answer is A.

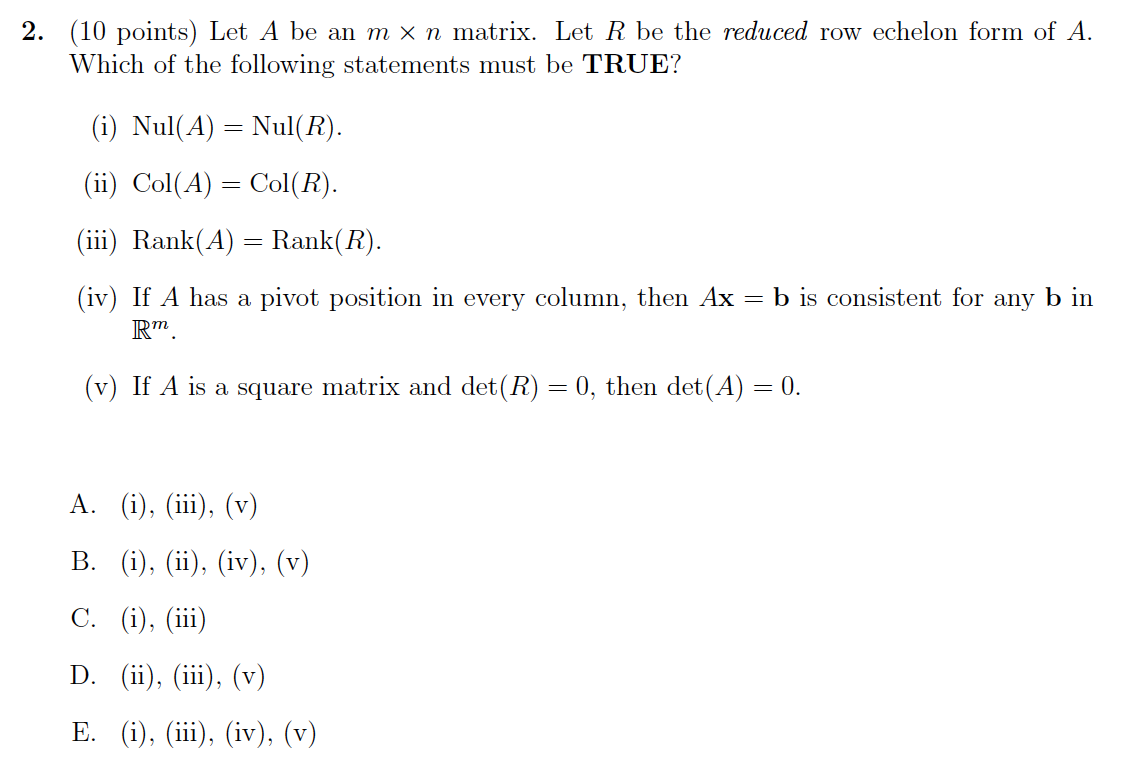

Problem 2 (10 points)

Problem 2 Solution

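Row operations do not change which columns of a matrix are pivot columns, so \(A\) and its reduced row echelon form \(R\) have the same pivot columns. Since the rank of a matrix is the number of its pivot columns, \(\mathrm{rank}\,A=\mathrm{rank}\,R\), and statement (iii) is true.

Statement (i) is also true, but equal ranks alone do not prove it: by the Rank Theorem (\(\mathrm{rank}\,A+\dim\mathrm{Nul}\,A=n\)), equal ranks only give \(\dim\mathrm{Nul}\,A=\dim\mathrm{Nul}\,R\). The real reason is that \(R\) is obtained from \(A\) by elementary row operations, which do not change the solution set of a homogeneous system. So \(A\pmb x=\pmb 0\) and \(R\pmb x=\pmb 0\) have exactly the same solutions, i.e., \(\mathrm{Nul}\,A=\mathrm{Nul}\,R\).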

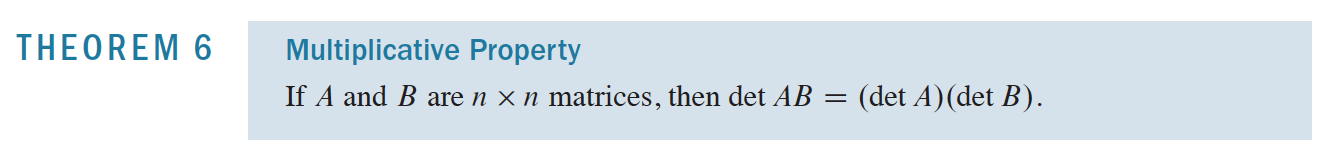

For a square matrix \(A\), suppose the elementary row operations that transform \(A\) into its reduced row echelon form \(R\) correspond to elementary matrices \(E_1,\dots,E_k\), so that \(E_kE_{k-1}\cdots E_1A=R\). Taking the determinants of both sides gives \(\det(E_kE_{k-1}\cdots E_1A)=\det R\). Since the determinant of a product of matrices equals the product of their determinants, we get \[\det A=\frac{\det R}{\det E_1\cdots\det E_k}\]

As shown in the "Proofs of Theorems 3 and 6" part of Section 3.2 Properties of Determinants, the determinant of an elementary matrix \(E\) is 1 for a row replacement, \(-1\) for a row interchange, or \(r\) for scaling a row by a nonzero scalar \(r\). In every case \(\det E\neq 0\), so the denominator above is never zero, and \(\det A=0\) exactly when \(\det R=0\). Statement (v) is true.

📝Notes: The reduced row echelon form of a square matrix is either the identity matrix or contains a row of 0's. Hence, \(\det R\) is either 1 or 0.
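The key fact, that an elementary matrix never has determinant zero, is easy to check numerically. The Python sketch below is a hypothetical 3×3 illustration: it builds one elementary matrix of each type from the identity, computes determinants by cofactor expansion (our own `det` helper), and confirms the product rule \(\det(EM)=\det E\cdot\det M\) on a made-up test matrix `M`.

```python
def det(M):
    """Determinant by cofactor expansion along the first row."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))


def matmul(X, Y):
    """Multiply two square matrices stored as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


# One elementary matrix of each type, each obtained from the 3x3 identity.
E_replace = [[1, 0, 0], [0, 1, 0], [4, 0, 1]]   # R3 <- R3 + 4*R1
E_swap = [[0, 1, 0], [1, 0, 0], [0, 0, 1]]      # R1 <-> R2
E_scale = [[1, 0, 0], [0, 5, 0], [0, 0, 1]]     # R2 <- 5*R2

M = [[2, 1, 0], [1, 3, 1], [0, 1, 4]]           # hypothetical test matrix

print(det(E_replace), det(E_swap), det(E_scale))   # 1 -1 5
for E in (E_replace, E_swap, E_scale):
    # Product rule: det(EM) = det(E) * det(M), with det(E) never 0.
    print(det(matmul(E, M)) == det(E) * det(M))
```

Because each \(\det E_i\) is nonzero, dividing by their product is always legal, which is exactly what the formula for \(\det A\) above relies on.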

Now look back at statement (ii): the column space of \(A\) is not necessarily equal to the column space of \(R\), because row operations can change the column space. For example, if \(R\) contains a row of 0's, every vector in Col \(R\) has a 0 in that entry, while the columns of \(A\) need not; in such a case the two column spaces can be different.
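A tiny example makes the contrast between statements (i) and (ii) concrete. In this Python sketch, the 2×2 matrix `A` is a made-up example (not from the exam), and `rref` is our own exact Gauss-Jordan routine built on the standard `fractions` module. A vector \(\pmb b\) lies in a column space exactly when appending it as an extra column creates no new pivot, which is what the hypothetical helper `in_col_space` tests.

```python
from fractions import Fraction


def rref(rows):
    """Exact Gauss-Jordan elimination; returns (reduced matrix, pivot columns)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue                      # no pivot in this column
        m[r], m[p] = m[p], m[r]           # row interchange
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]    # scale the pivot to 1
        for i in range(len(m)):           # clear the rest of column c
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots


def in_col_space(M, b):
    """b is in Col M iff appending b as a column creates no new pivot."""
    return len(rref([row + [x] for row, x in zip(M, b)])[1]) == len(rref(M)[1])


def mat_vec(M, v):
    """Matrix-vector product."""
    return [sum(a * x for a, x in zip(row, v)) for row in M]


A = [[1, 2], [2, 4]]      # hypothetical example, not from the exam
R, _ = rref(A)            # reduced row echelon form: [[1, 2], [0, 0]]

v = [-2, 1]
print(mat_vec(A, v) == [0, 0], mat_vec(R, v) == [0, 0])    # True True
print(in_col_space(A, [1, 2]), in_col_space(R, [1, 2]))    # True False
```

The same vector solves both homogeneous systems (same null space), yet the first column of \(A\) is not in Col \(R\) (different column spaces).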

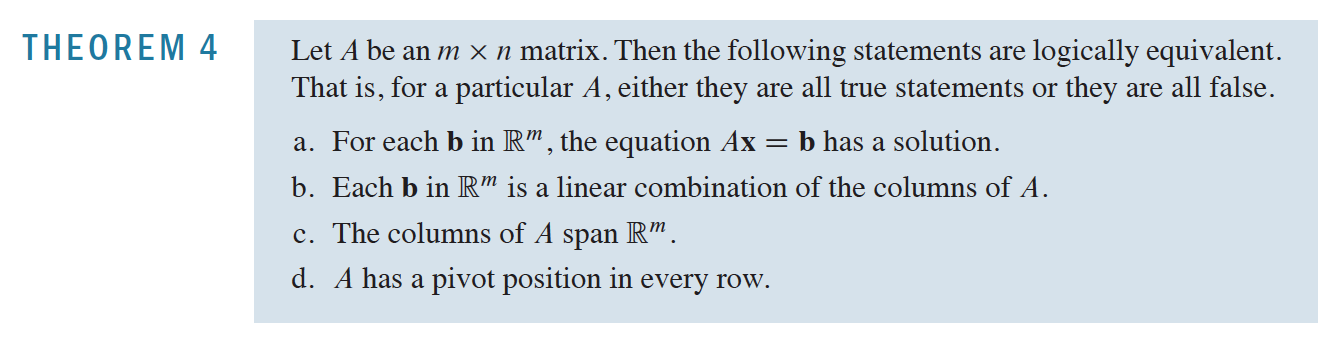

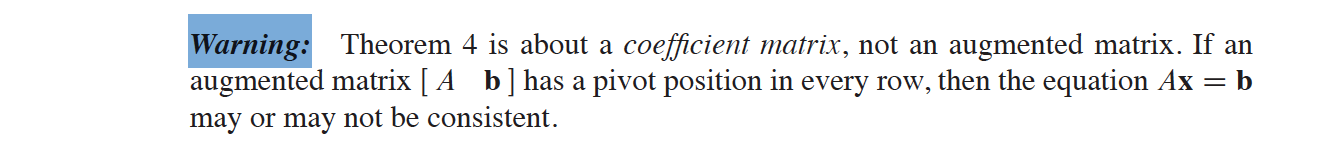

For the same reason, statement (iv) is false. By Theorem 4 in Section 1.4 The Matrix Equation \(A\pmb x=\pmb b\) (see "Common Errors and Warnings" at the end), "For each \(\pmb b\) in \(\mathbb R^m\), the equation \(A\pmb x=\pmb b\) has a solution" is true if and only if \(A\) has a pivot position in every row (not every column).

The answer is A.

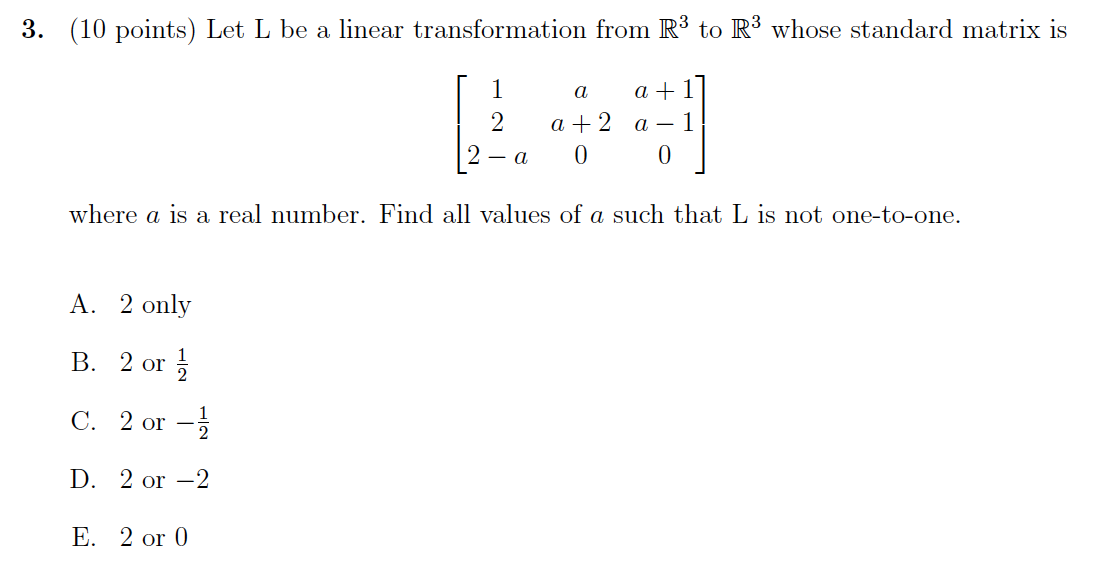

Problem 3 (10 points)

Problem 3 Solution

First, we can do row reduction to obtain the row echelon form of the standard matrix \[\begin{align} &\begin{bmatrix}1 &a &a+1\\2 &a+2 &a-1\\2-a &0 &0\\\end{bmatrix}\sim \begin{bmatrix}1 &a &a+1\\0 &-a+2 &-a-3\\2-a &0 &0\\\end{bmatrix}\sim\\ \sim&\begin{bmatrix}1 &a &a+1\\0 &-a+2 &-a-3\\0 &a(a-2) &(a+1)(a-2)\\\end{bmatrix}\sim \begin{bmatrix}1 &a &a+1\\0 &-a+2 &-a-3\\0 &0 &-4a-2\\\end{bmatrix} \end{align}\]

If \(a=2\), the 2nd column is a multiple of the 1st column, so the columns of \(A\) are linearly dependent and the transformation is not one-to-one (see Theorem 12 of Section 1.9 The Matrix of a Linear Transformation).

Moreover, if \(a=-\frac{1}{2}\), the entries of the last row are all 0s. In that case, \(A\) has only two pivots, so \(A\pmb x=\pmb 0\) has nontrivial solutions and \(L\) is not one-to-one (see Theorem 11 of Section 1.9 The Matrix of a Linear Transformation). For any other value of \(a\), the diagonal entries \(1\), \(2-a\), and \(-4a-2\) of the echelon form are all nonzero, so \(A\) has three pivots and \(L\) is one-to-one.
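Since \(A\) is square, \(L\) is one-to-one exactly when \(A\) is invertible, i.e., when \(\det A\neq 0\). The reduction above uses only row replacements, which do not change the determinant, so \(\det A=1\cdot(2-a)(-4a-2)\). The Python sketch below (our own check; `standard_matrix` and `det3` are hypothetical helper names) evaluates the determinant of the original matrix for a few sample values of \(a\), asserts that it matches this factored form, and shows it vanishes at \(a=2\) and \(a=-\frac12\).

```python
from fractions import Fraction


def standard_matrix(a):
    """Standard matrix of L from Problem 3, as a function of the parameter a."""
    return [[1, a, a + 1],
            [2, a + 2, a - 1],
            [2 - a, 0, 0]]


def det3(M):
    """3x3 determinant by cofactor expansion along the first row."""
    (p, q, r), (s, t, u), (v, w, x) = M
    return p * (t * x - u * w) - q * (s * x - u * v) + r * (s * w - t * v)


for a in [Fraction(2), Fraction(-1, 2), Fraction(0), Fraction(1), Fraction(3)]:
    d = det3(standard_matrix(a))
    assert d == (2 - a) * (-4 * a - 2)      # matches the echelon-form product
    print(a, d, "one-to-one" if d != 0 else "not one-to-one")
```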

So the answer is C.

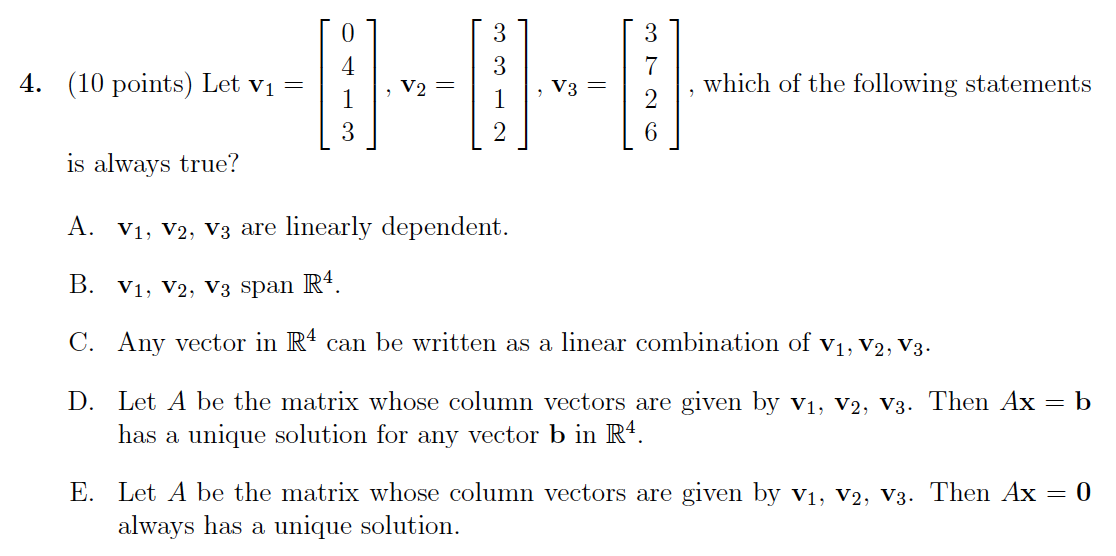

Problem 4 (10 points)

Problem 4 Solution

Statement A is wrong: none of these 3 vectors is a linear combination of the other two, so they form a linearly independent set.

Statement B is wrong: three vectors can never span \(\mathbb R^4\), because a matrix with only 3 columns cannot have a pivot in each of its 4 rows. A spanning set of \(\mathbb R^4\) needs at least 4 vectors.

Statements C and D are wrong for the same reason: not every vector in \(\mathbb R^4\) is a linear combination of these 3 vectors, so \(A\pmb x=\pmb b\) may have no solution.

Statement E is correct: \(A\pmb x=\pmb 0\) has a unique solution, namely the trivial one. As stated in Section 1.7 Linear Independence of the textbook:

The columns of a matrix \(A\) are linearly independent if and only if the equation \(A\pmb x=\pmb 0\) has only the trivial solution.

So the answer is E.
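The exam's three vectors appear only in the problem image, so the Python sketch below uses three made-up linearly independent vectors in \(\mathbb R^4\) as a stand-in. It counts pivots with our own exact Gauss-Jordan helper `rref`: 3 pivots for 3 columns means \(A\pmb x=\pmb 0\) has only the trivial solution, while 3 pivots for 4 rows means the columns cannot span \(\mathbb R^4\), so some \(\pmb b\) (here \(\pmb e_4\)) makes \(A\pmb x=\pmb b\) inconsistent.

```python
from fractions import Fraction


def rref(rows):
    """Exact Gauss-Jordan elimination; returns (reduced matrix, pivot columns)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue                      # no pivot in this column
        m[r], m[p] = m[p], m[r]           # row interchange
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]    # scale the pivot to 1
        for i in range(len(m)):           # clear the rest of column c
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots


# Hypothetical columns v1 = (1,0,2,1), v2 = (0,1,1,0), v3 = (1,1,0,3).
A = [[1, 0, 1],
     [0, 1, 1],
     [2, 1, 0],
     [1, 0, 3]]

rank = len(rref(A)[1])
print("rank:", rank)                                   # rank: 3
print("only trivial solution of Ax = 0:", rank == 3)   # True (pivot in every column)
print("columns span R^4:", rank == 4)                  # False (no pivot in row 4)

e4 = [0, 0, 0, 1]
aug = [row + [b] for row, b in zip(A, e4)]
print("Ax = e4 consistent:", len(rref(aug)[1]) == rank)   # False
```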

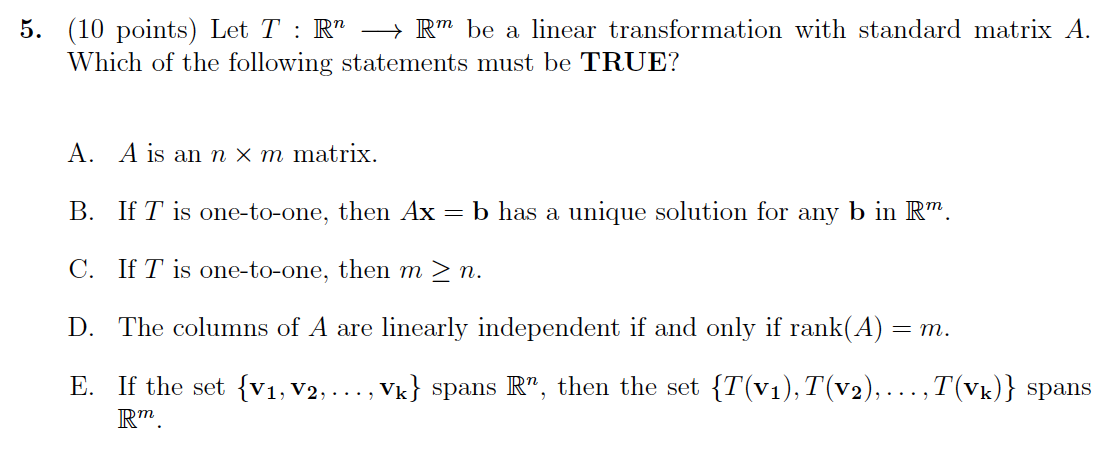

Problem 5 (10 points)

Problem 5 Solution

From the given conditions, \(A\) is an \(m\times n\) matrix, so statement A is wrong.

Statement B is not necessarily true: a vector \(\pmb b\) in the codomain \(\mathbb R^m\) of \(T\) need not lie in the range of \(T\). Statement E fails for the same reason.

Statement D is wrong. Since \(m\) is the number of rows of \(A\), \(\mathrm{rank}\,A=m\) only means there is a pivot in every row. For the columns to be linearly independent, we need a pivot in every column, i.e., \(\mathrm{rank}\,A=n\).

That leaves statement C. If \(m<n\), \(A\) has more columns than rows, so its columns are linearly dependent (Theorem 8 of Section 1.7 Linear Independence). But \(T\) is one-to-one if and only if the columns of \(A\) are linearly independent, so \(m<n\) is impossible here.
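To see the \(m<n\) case concretely, the Python sketch below takes a made-up \(2\times 3\) matrix (so \(m=2<n=3\)), row reduces it with our own exact helper `rref`, and reads off a nonzero vector in Nul \(A\) with the hypothetical helper `null_space_basis`. That vector and \(\pmb 0\) have the same image, so \(T(\pmb x)=A\pmb x\) cannot be one-to-one.

```python
from fractions import Fraction


def rref(rows):
    """Exact Gauss-Jordan elimination; returns (reduced matrix, pivot columns)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue                      # no pivot in this column
        m[r], m[p] = m[p], m[r]           # row interchange
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]    # scale the pivot to 1
        for i in range(len(m)):           # clear the rest of column c
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots


def null_space_basis(rows):
    """One basis vector of Nul for each free variable, read off the RREF."""
    R, pivots = rref(rows)
    n = len(rows[0])
    basis = []
    for f in (j for j in range(n) if j not in pivots):
        v = [Fraction(0)] * n
        v[f] = Fraction(1)                # this free variable = 1, others = 0
        for r, p in enumerate(pivots):
            v[p] = -R[r][f]               # solve the pivot equation for x_p
        basis.append(v)
    return basis


A = [[1, 2, 3], [4, 5, 6]]   # hypothetical 2x3 matrix: more columns than rows
v = null_space_basis(A)[0]
print([int(x) for x in v])                                      # [1, -2, 1]
print([int(sum(a * x for a, x in zip(row, v))) for row in A])   # [0, 0]
```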

The answer is C.

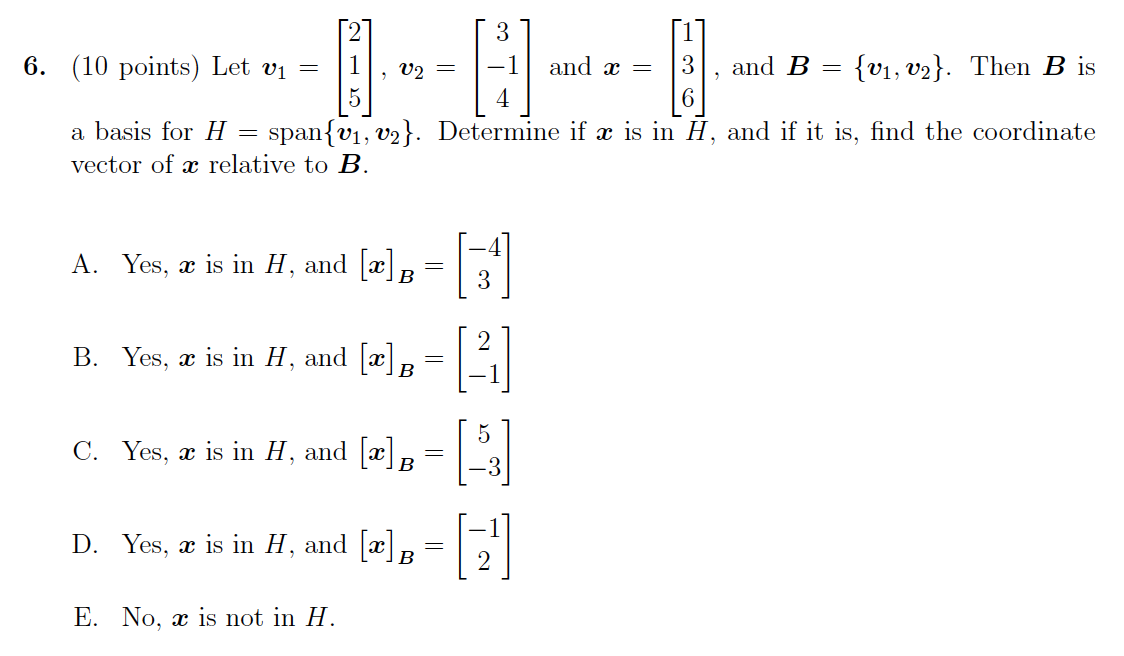

Problem 6 (10 points)

Problem 6 Solution

This amounts to solving the linear system \[ \begin{bmatrix}2 &3\\1 &-1\\5 &4\\\end{bmatrix} \begin{bmatrix}x_1\\x_2\\\end{bmatrix}= \begin{bmatrix}1\\3\\6\\\end{bmatrix} \] Row reduce the augmented matrix: \[ \begin{bmatrix}2 &3 &1\\1 &-1 &3\\5 &4 &6\\\end{bmatrix}\sim \begin{bmatrix}1 &-1 &3\\2 &3 &1\\5 &4 &6\\\end{bmatrix}\sim \begin{bmatrix}1 &-1 &3\\0 &5 &-5\\0 &9 &-9\\\end{bmatrix}\sim \begin{bmatrix}1 &-1 &3\\0 &1 &-1\\0 &0 &0\\\end{bmatrix} \]

This yields the unique solution \(x_1=2\) and \(x_2=-1\). So the answer is B.
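The same elimination can be automated. This Python sketch uses our own exact Gauss-Jordan helper `rref` (not a library routine) to reduce the augmented matrix, checks that the last column is not a pivot column (consistency) and that both coefficient columns are pivot columns (uniqueness), then reads off \(x_1\) and \(x_2\).

```python
from fractions import Fraction


def rref(rows):
    """Exact Gauss-Jordan elimination; returns (reduced matrix, pivot columns)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue                      # no pivot in this column
        m[r], m[p] = m[p], m[r]           # row interchange
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]    # scale the pivot to 1
        for i in range(len(m)):           # clear the rest of column c
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots


aug = [[2, 3, 1],
       [1, -1, 3],
       [5, 4, 6]]

R, pivots = rref(aug)
consistent = 2 not in pivots      # no pivot in the augmented column
unique = pivots == [0, 1]         # every coefficient column has a pivot
x = [R[0][2], R[1][2]] if consistent and unique else None
print(consistent, unique, [int(t) for t in x])   # True True [2, -1]
```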

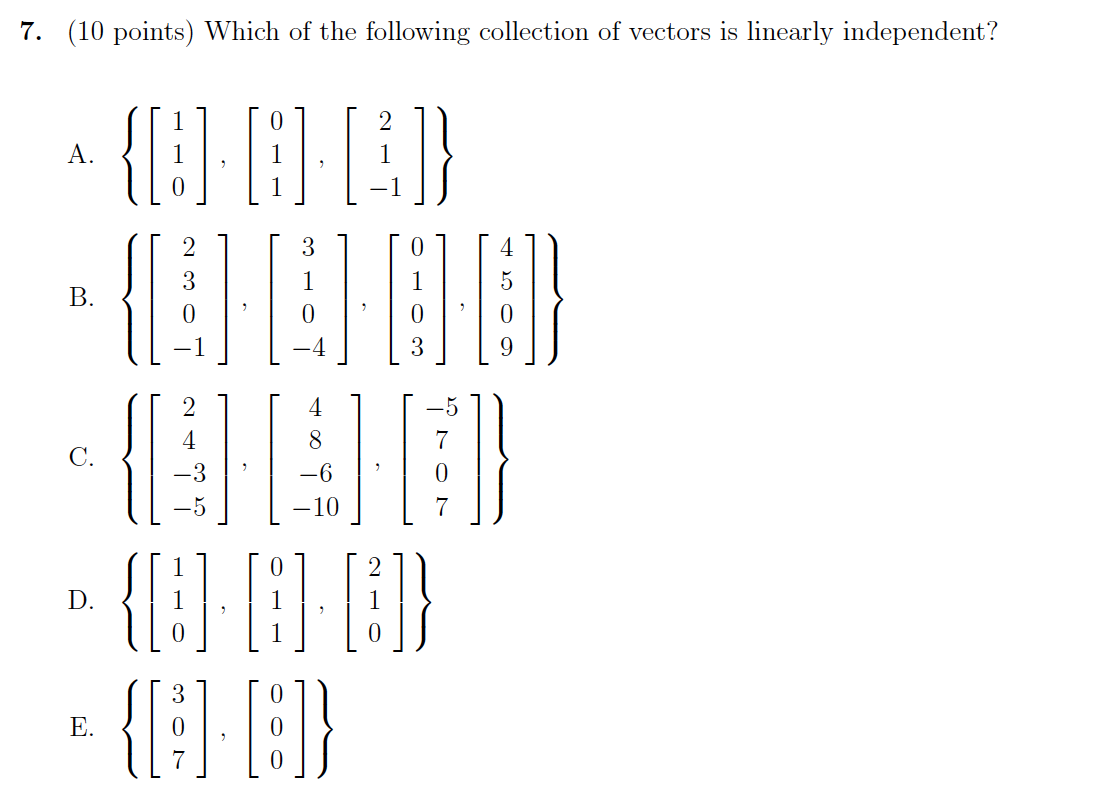

Problem 7 (10 points)

Problem 7 Solution

First, we can exclude E because it contains the zero vector, and any vector set containing the zero vector is linearly dependent.

In C, column 2 equals 2 times column 1, so the set is linearly dependent.

A is also wrong: it is easy to see that column 3 equals 2 times column 1 minus column 2.

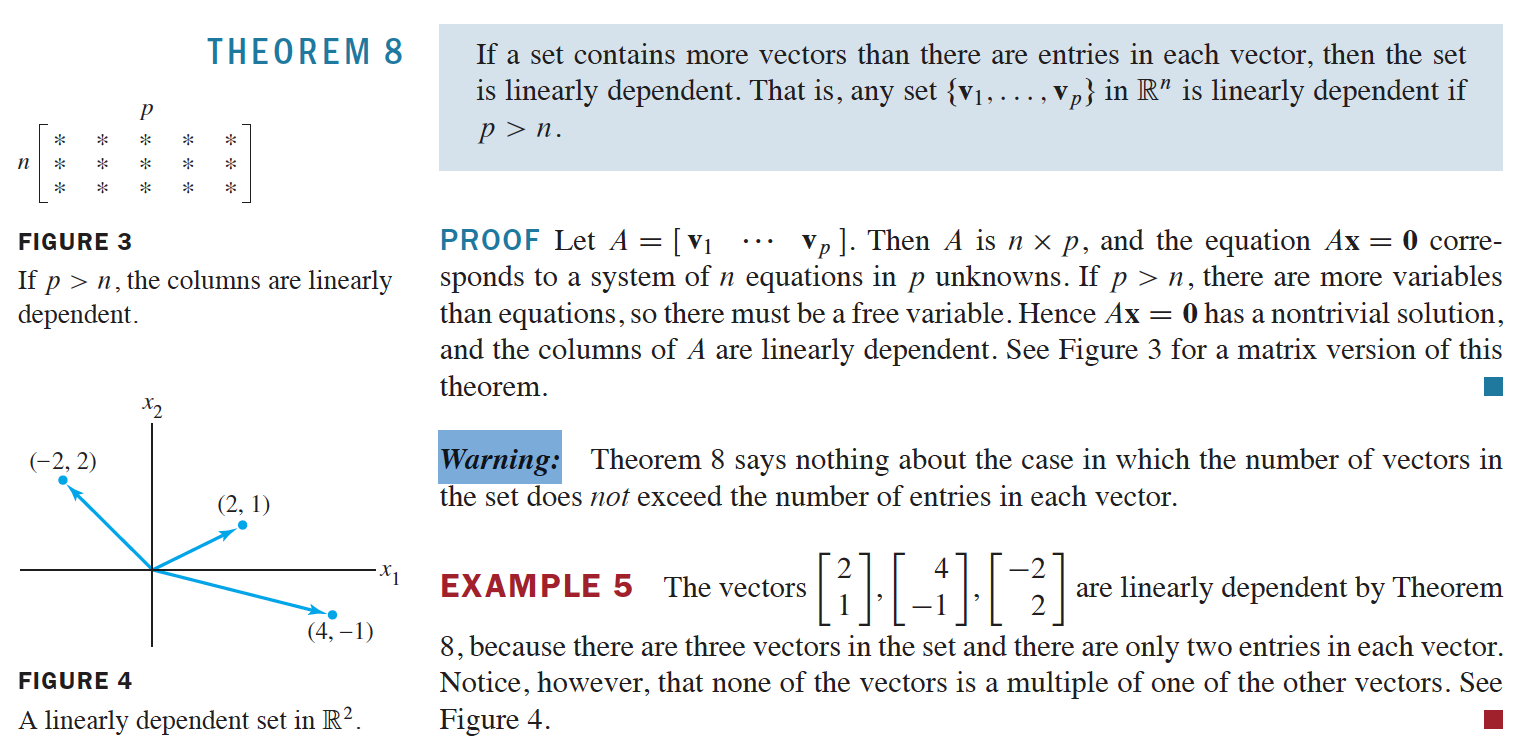

B is wrong too: all four vectors have 0 as their third entry. Deleting that entry gives 4 vectors in \(\mathbb R^3\), which must be linearly dependent by Theorem 8 of Section 1.7 Linear Independence (a set with more vectors than entries in each vector is linearly dependent). The same dependence relation holds for the original vectors, so one of them is a linear combination of the other 3.

D can be converted to the vector set \[\begin{Bmatrix} \begin{bmatrix}1\\1\\0\end{bmatrix}, \begin{bmatrix}0\\1\\1\end{bmatrix}, \begin{bmatrix}1\\0\\0\end{bmatrix} \end{Bmatrix}\] This set is linearly independent, since no vector in it is a linear combination of the other two.

So the answer is D.
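A set of \(p\) vectors is linearly independent exactly when the matrix having them as columns has \(p\) pivot columns. The Python sketch below (helper names `rref` and `is_independent` are our own) applies this test to the vectors of option D as written above, plus two made-up sets that mimic the defects of option E (a zero vector) and option C (one column a multiple of another).

```python
from fractions import Fraction


def rref(rows):
    """Exact Gauss-Jordan elimination; returns (reduced matrix, pivot columns)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue                      # no pivot in this column
        m[r], m[p] = m[p], m[r]           # row interchange
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]    # scale the pivot to 1
        for i in range(len(m)):           # clear the rest of column c
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots


def is_independent(vectors):
    """Independent iff every column of [v1 ... vp] is a pivot column."""
    cols = [[v[i] for v in vectors] for i in range(len(vectors[0]))]
    return len(rref(cols)[1]) == len(vectors)


D = [[1, 1, 0], [0, 1, 1], [1, 0, 0]]
with_zero = [[1, 2, 3], [0, 0, 0]]    # hypothetical, like option E
multiple = [[1, 2, 0], [2, 4, 0]]     # hypothetical, like option C
print(is_independent(D), is_independent(with_zero), is_independent(multiple))
# True False False
```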

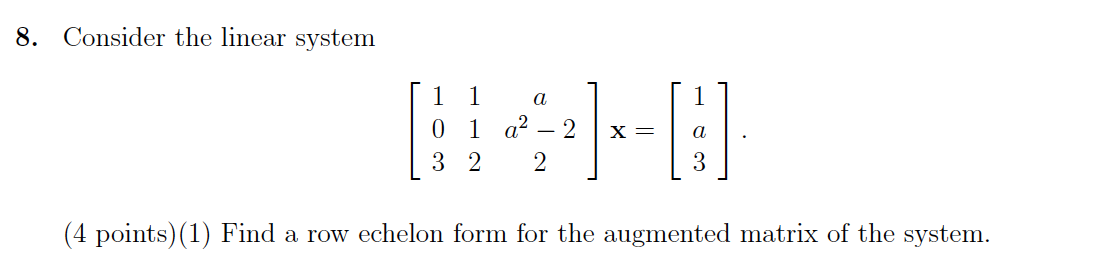

Problem 8 (10 points)

Problem 8 Solution

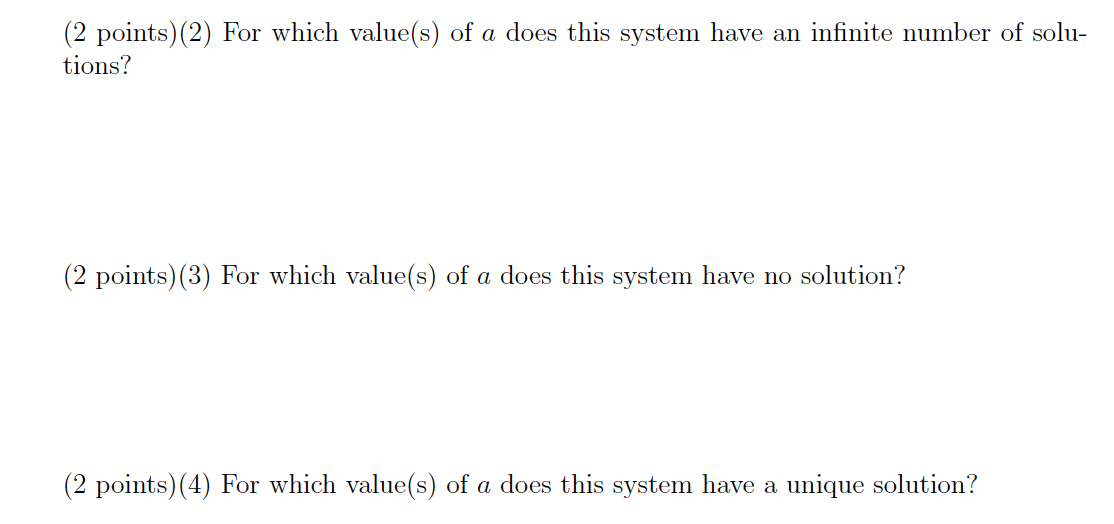

Start with the augmented matrix and do row reduction \[ \begin{bmatrix}1 &1 &a &1\\0 &1 &a^2-2 &a\\3 &2 &2 &3\\\end{bmatrix}\sim \begin{bmatrix}1 &1 &a &1\\0 &1 &a^2-2 &a\\0 &-1 &2-3a &0\\\end{bmatrix}\sim \begin{bmatrix}1 &1 &a &1\\0 &1 &a^2-2 &a\\0 &0 &a(a-3) &a\\\end{bmatrix} \]

If \(a=0\), the last row is all zeros, so the system has one free variable and infinitely many solutions.

If \(a=3\), the last row reads \(0=3\), so the system is inconsistent and has no solution.

If \(a\) is neither 0 nor 3, the echelon form has a pivot in each of the three coefficient columns, so the system has a unique solution.
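The case analysis can be cross-checked with the rank criterion: the system is inconsistent when the augmented matrix has more pivots than the coefficient matrix, has a unique solution when all three coefficient columns are pivot columns, and otherwise has infinitely many solutions. The Python sketch below (our own helpers `rref` and `classify`) applies this to the augmented matrix of Problem 8 for a few sample values of \(a\).

```python
from fractions import Fraction


def rref(rows):
    """Exact Gauss-Jordan elimination; returns (reduced matrix, pivot columns)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue                      # no pivot in this column
        m[r], m[p] = m[p], m[r]           # row interchange
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]    # scale the pivot to 1
        for i in range(len(m)):           # clear the rest of column c
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots


def classify(a):
    """Classify the Problem 8 system by comparing coefficient and augmented ranks."""
    a = Fraction(a)
    aug = [[1, 1, a, 1],
           [0, 1, a * a - 2, a],
           [3, 2, 2, 3]]
    rank_coeff = len(rref([row[:3] for row in aug])[1])
    rank_aug = len(rref(aug)[1])
    if rank_aug > rank_coeff:
        return "no solution"
    if rank_coeff == 3:
        return "unique solution"
    return "infinitely many solutions"


for a in [0, 3, 1, -2]:
    print(a, classify(a))
# 0 infinitely many solutions
# 3 no solution
# 1 unique solution
# -2 unique solution
```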

Problem 9 (10 points)

Problem 9 Solution

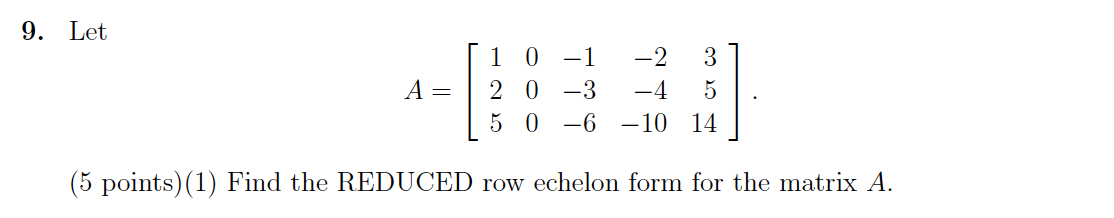

The row reduction to the reduced row echelon form is shown below \[\begin{align} &\begin{bmatrix}1 &0 &-1 &-2 &3\\2 &0 &-3 &-4 &5\\5 &0 &-6 &-10 &14\\\end{bmatrix}\sim \begin{bmatrix}1 &0 &-1 &-2 &3\\0 &0 &-1 &0 &-1\\0 &0 &-1 &0 &-1\\\end{bmatrix}\\ \sim&\begin{bmatrix}1 &0 &-1 &-2 &3\\0 &0 &-1 &0 &-1\\0 &0 &0 &0 &0\\\end{bmatrix}\sim \begin{bmatrix}1 &0 &-1 &-2 &3\\0 &0 &1 &0 &1\\0 &0 &0 &0 &0\\\end{bmatrix}\sim \begin{bmatrix}1 &0 &0 &-2 &4\\0 &0 &1 &0 &1\\0 &0 &0 &0 &0\\\end{bmatrix} \end{align}\]

From the reduced row echelon form, we can see that there are two pivots and three free variables \(x_2\), \(x_4\), and \(x_5\). So the system \(A\pmb x=\pmb 0\) becomes \[\begin{align} x_1-2x_4+4x_5&=0\\ x_3+x_5&=0 \end{align}\]

Now write the solution in parametric vector form. The general solution is \(x_1=2x_4-4x_5\), \(x_3=-x_5\). This can be written as \[ \begin{bmatrix}x_1\\x_2\\x_3\\x_4\\x_5\end{bmatrix}= \begin{bmatrix}2x_4-4x_5\\x_2\\-x_5\\x_4\\x_5\end{bmatrix}= x_2\begin{bmatrix}0\\1\\0\\0\\0\end{bmatrix}+ x_4\begin{bmatrix}2\\0\\0\\1\\0\end{bmatrix}+ x_5\begin{bmatrix}-4\\0\\-1\\0\\1\end{bmatrix} \] So a basis for Nul \(A\) is \[\begin{Bmatrix} \begin{bmatrix}0\\1\\0\\0\\0\end{bmatrix}, \begin{bmatrix}2\\0\\0\\1\\0\end{bmatrix}, \begin{bmatrix}-4\\0\\-1\\0\\1\end{bmatrix} \end{Bmatrix}\]
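The Python sketch below reproduces the basis computation. It starts from the matrix obtained after the first round of row replacements shown above (row-equivalent to \(A\), hence with the same null space), finishes the reduction with our own exact helper `rref`, and builds one basis vector per free variable by setting that variable to 1 and the other free variables to 0 (the hypothetical helper `null_space_basis`).

```python
from fractions import Fraction


def rref(rows):
    """Exact Gauss-Jordan elimination; returns (reduced matrix, pivot columns)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue                      # no pivot in this column
        m[r], m[p] = m[p], m[r]           # row interchange
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]    # scale the pivot to 1
        for i in range(len(m)):           # clear the rest of column c
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots


def null_space_basis(rows):
    """One basis vector of Nul for each free variable, read off the RREF."""
    R, pivots = rref(rows)
    n = len(rows[0])
    basis = []
    for f in (j for j in range(n) if j not in pivots):
        v = [Fraction(0)] * n
        v[f] = Fraction(1)                # this free variable = 1, others = 0
        for r, p in enumerate(pivots):
            v[p] = -R[r][f]               # solve the pivot equation for x_p
        basis.append(v)
    return basis


step1 = [[1, 0, -1, -2, 3],
         [0, 0, -1, 0, -1],
         [0, 0, -1, 0, -1]]
for v in null_space_basis(step1):
    print([int(x) for x in v])
# [0, 1, 0, 0, 0]
# [2, 0, 0, 1, 0]
# [-4, 0, -1, 0, 1]
```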

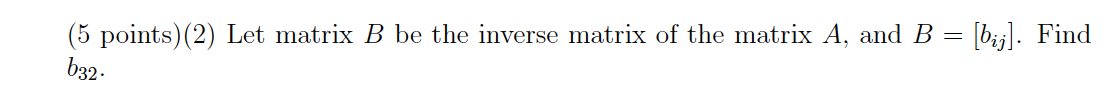

Problem 10 (10 points)

Problem 10 Solution

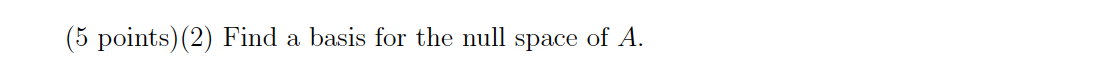

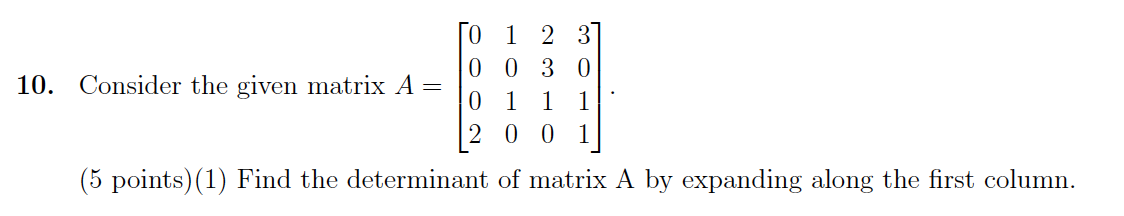

To compute \(\det A\) by cofactor expansion down the 1st column, note that the only nonzero entry in column 1 is \(a_{4,1}=2\). Expanding the resulting \(3\times 3\) determinant along its 2nd row (only the highlighted 3 is nonzero), we have \[\begin{align} \det A&=(-1)^{4+1}\cdot 2\cdot\begin{vmatrix}1 &2 &3\\0 &\color{fuchsia}3 &0\\1 &1 &1\end{vmatrix}\\ &=(-2)\cdot 3\begin{vmatrix}1 &3\\1 &1\end{vmatrix}=(-6)\cdot(-2)=12 \end{align}\]

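Using the adjugate formula \(A^{-1}=\frac{1}{\det A}\,\mathrm{adj}\,A\), where \(\mathrm{adj}\,A\) is the transpose of the cofactor matrix, the \((3,2)\) entry of \(B=A^{-1}\) is the \((2,3)\) cofactor divided by \(\det A\). Expanding that minor down its 1st column (only the highlighted 2 is nonzero) gives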

\[\begin{align} b_{3,2}&=\frac{C_{2,3}}{\det A}=\frac{1}{12}\cdot(-1)^{2+3}\begin{vmatrix}0 &1 &3\\0 &1 &1\\\color{fuchsia}2 &0 &1\end{vmatrix}\\ &=\frac{-1}{12}\cdot(-1)^{3+1}\cdot 2\begin{vmatrix}1 &3\\1 &1\end{vmatrix}=\frac{1}{3} \end{align}\]
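Because the full matrix \(A\) is only shown in the exam image, the Python sketch below works from the two \(3\times 3\) minors that appear in the computations above (`M41` and `M23` are our own names for them). It recomputes \(\det A\) by expansion down column 1 and the entry \(b_{3,2}=C_{2,3}/\det A\) with exact fractions.

```python
from fractions import Fraction


def det(M):
    """Determinant by cofactor expansion along the first row."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))


M41 = [[1, 2, 3], [0, 3, 0], [1, 1, 1]]   # A with row 4 and column 1 deleted
M23 = [[0, 1, 3], [0, 1, 1], [2, 0, 1]]   # A with row 2 and column 3 deleted

# The only nonzero entry of column 1 is the 2 in row 4, column 1.
det_A = (-1) ** (4 + 1) * 2 * det(M41)
C23 = (-1) ** (2 + 3) * det(M23)          # cofactor C_{2,3}
b32 = Fraction(C23, det_A)                # (3,2) entry of the inverse
print(det_A, C23, b32)                    # 12 4 1/3
```

Note the index swap: the \((3,2)\) entry of the inverse uses the \((2,3)\) cofactor, because the adjugate is the transpose of the cofactor matrix.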

Exam Summary

Here is the table listing the key knowledge points for each problem in this exam:

| Problem # | Points of Knowledge |

|---|---|

| 1 | Matrix Multiplications, Inverse Matrix |

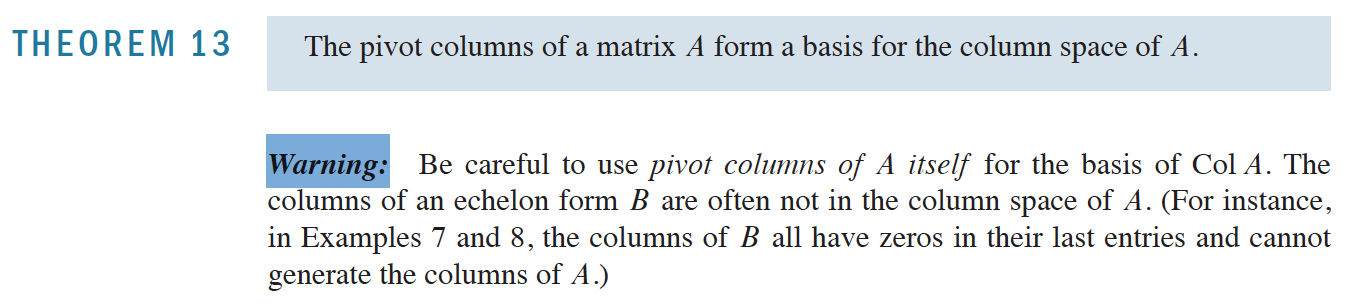

| 2 | Column Space, Rank, Nul Space, Determinant, Pivot, Linear System Consistency |

| 3 | Linear Transformation, One-to-One Mapping |

| 4 | Linear Dependency, Vector Set Span \(\mathbb R^n\), Unique Solution |

| 5 | Linear Transformation, One-to-One Mapping, Rank, Column Linear Independency, Vector Set Span \(\mathbb R^n\) |

| 6 | Basis of Span \({v_1, v_2}\) |

| 7 | Linear Independency Vector Set |

| 8 | Row Echelon Form, Augmented Matrix, Linear System Solution Set and Consistency |

| 9 | Reduced Row Echelon Form, Basis for the Null Space |

| 10 | Determinant, Cofactor Expansion, Inverse Matrix, The Adjugate of Matrix |

As the table shows, the exam covers the topics of the specified textbook sections well. Students should review those sections carefully to prepare for this and similar exams.

Common Errors and Warnings

Here are a few warnings collected from the textbook. Students preparing for the MA 26500 Midterm I are strongly encouraged to review them carefully to recognize common errors and learn how to avoid them on the test.

The Matrix Equation

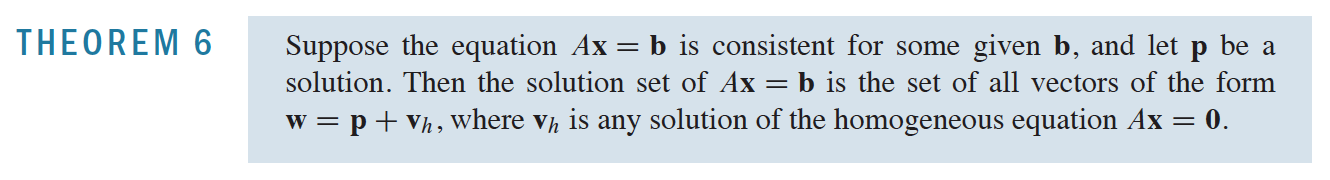

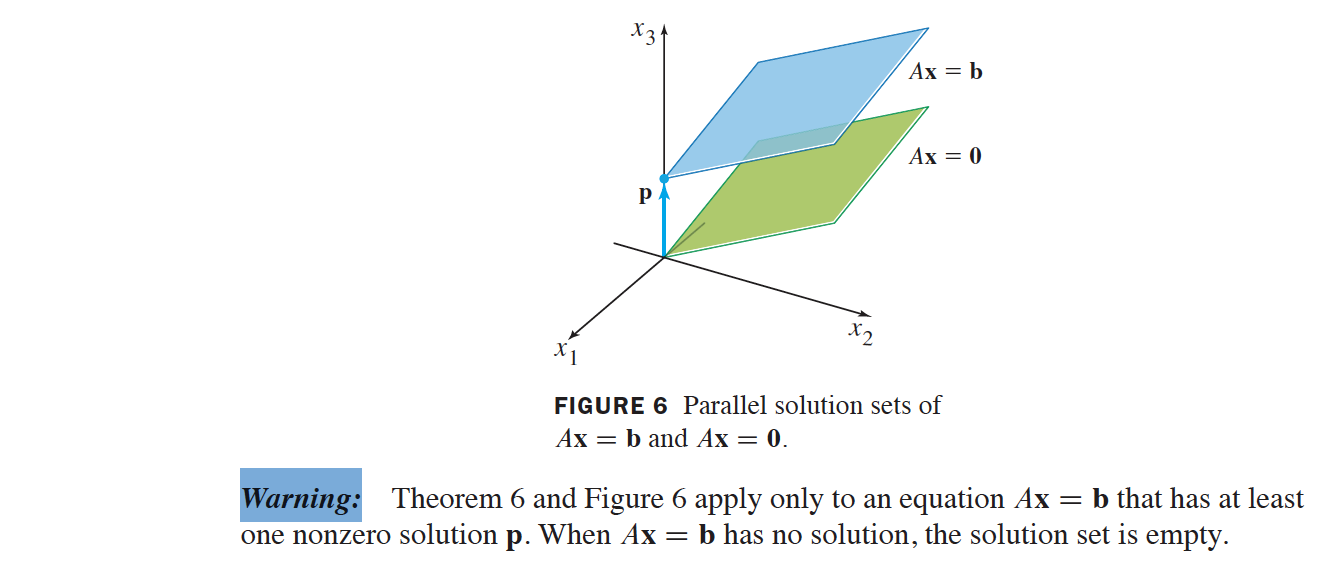

Solution Sets of Linear Systems

Linear Independence

Matrix Operations

Subspaces of \(\mathbb R^n\)

Properties of Determinants