Purdue MA 26500 Spring 2023 Midterm II Solutions

This post presents the solutions and analysis for the Purdue MA 26500 Spring 2023 Midterm II. This second midterm covers topics in Chapter 4 (Vector Spaces) and Chapter 5 (Eigenvalues and Eigenvectors) of the textbook.

Introduction

The Purdue Department of Mathematics offers a linear algebra course, MA 26500, every semester. It is mandatory for undergraduate students in almost all science and engineering majors.

Textbook and Study Guide


The MA 26500 textbook is Linear Algebra and Its Applications (6th Edition) by David C. Lay, Steven R. Lay, and Judi J. McDonald. The authors have also published a student study guide for it, which is available for purchase on Amazon as well.

Exam Information

MA 26500 midterm II covers the topics of Sections 4.1 – 5.7 in the textbook. It is usually scheduled at the beginning of the thirteenth week. The exam format is a combination of multiple-choice questions and short-answer questions. Students are given one hour to finish answering the exam questions.

Building on the linear equations and matrix algebra covered in Chapters 1 and 2 of the book, Chapter 4 takes the student on a deep dive into the vector space framework. Chapter 5 introduces the important concepts of eigenvectors and eigenvalues, which are useful throughout pure and applied mathematics. Eigenvalues are also used to study differential equations and continuous dynamical systems, and they provide critical information in engineering design.

Reference Links

- Purdue Department of Mathematics Course Archive

- Purdue MA 26500 Spring 2024

- Purdue MA 26500 Exam Archive

Spring 2023 Midterm II Solutions

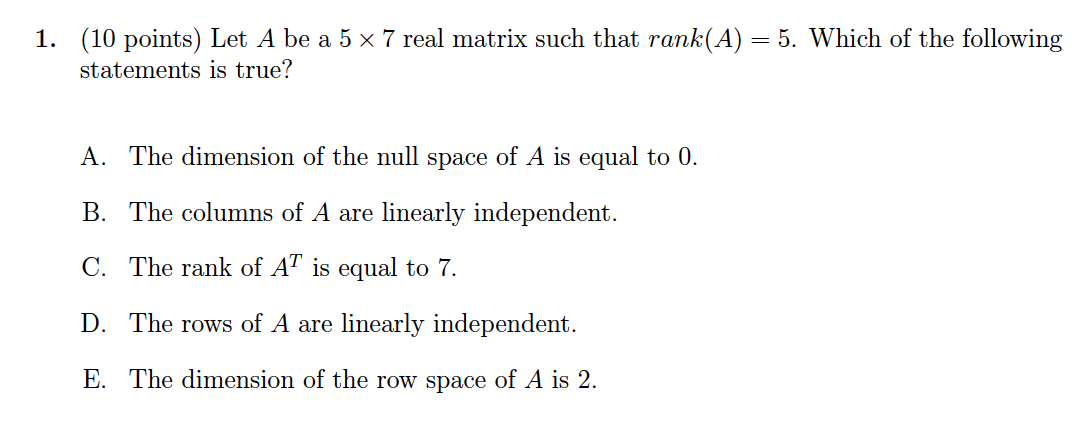

Problem 1 (10 points)

Problem 1 Solution

A For a \(5\times 7\) matrix \(A\) with \(rank(A)=5\), the dimension of the null space is \(7-5=2\). So this is wrong.

B The matrix has 7 columns, but there are only 5 pivot columns, so the columns of \(A\) are NOT linearly independent. It is wrong.

C \(A^T\) is a \(7\times 5\) matrix, and the rank of \(A^T\) is no more than 5. This statement is wrong.

D Because there are 5 pivots, each row has one pivot. Thus the rows of \(A\) are linearly independent. This statement is TRUE.

E From statement D, it can be deduced that the dimension of the row space is 5, not 2.

The answer is D.
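All five choices can be checked against the Rank Theorem. Written out for this \(5\times 7\) matrix with 5 pivots, as a quick summary of the facts used above: \[ \operatorname{rank}A+\dim\operatorname{Nul}A=7\;\Rightarrow\;\dim\operatorname{Nul}A=7-5=2,\qquad \dim\operatorname{Row}A=\dim\operatorname{Col}A=\operatorname{rank}A=\operatorname{rank}A^T=5 \]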

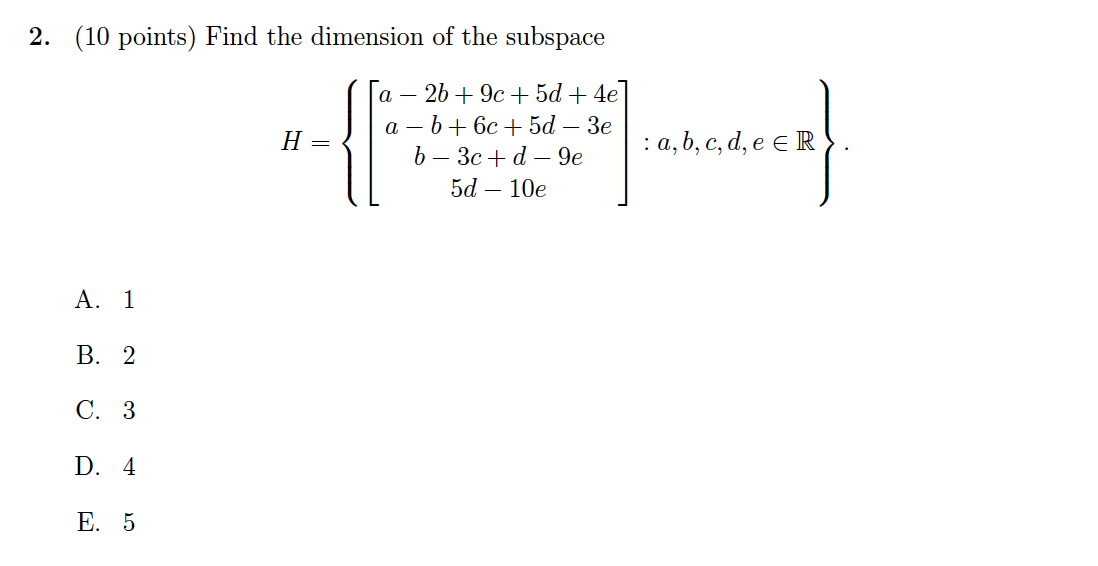

Problem 2 (10 points)

Problem 2 Solution

The vector in this subspace \(H\) can be represented as \[ a\begin{bmatrix}1\\1\\0\\0\end{bmatrix}+ b\begin{bmatrix}-2\\-1\\1\\0\end{bmatrix}+ c\begin{bmatrix}9\\6\\-3\\0\end{bmatrix}+ d\begin{bmatrix}5\\5\\1\\5\end{bmatrix}+ e\begin{bmatrix}4\\-3\\-9\\-10\end{bmatrix} \]

Here the matrix \(A\) formed by these vectors has 5 columns, each with 4 entries. Five vectors in \(\mathbb R^4\) cannot be linearly independent, so these column vectors are linearly dependent.

Note that row operations do not affect the dependence relations between the column vectors. This makes it possible to use row reduction to find a basis for the column space.

\[ \begin{align} &\begin{bmatrix}1 &-2 &9 &5 &4\\1 &-1 &6 &5 &-3\\0 &1 &-3 &1 &-9\\0 &0 &0 &5 &-10\end{bmatrix}\sim \begin{bmatrix}1 &-2 &9 &5 &4\\0 &1 &-3 &0 &-7\\0 &1 &-3 &1 &-9\\0 &0 &0 &5 &-10\end{bmatrix}\\ \sim&\begin{bmatrix}1 &-2 &9 &5 &4\\0 &1 &-3 &0 &-7\\0 &0 &0 &1 &-2\\0 &0 &0 &5 &-10\end{bmatrix}\sim \begin{bmatrix}\color{fuchsia}1 &-2 &9 &5 &4\\0 &\color{fuchsia}1 &-3 &0 &-7\\0 &0 &0 &\color{fuchsia}1 &-2\\0 &0 &0 &0 &0\end{bmatrix} \end{align} \]

The dimension of \(H\) is the number of linearly independent columns of the matrix, which is the number of pivots in \(A\)'s row echelon form. So the dimension is 3.

The answer is C.
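The pivot count can be double-checked with a few lines of code. The following is a minimal sketch using only the Python standard library; the helper name `pivot_columns` is made up for this illustration, and exact `Fraction` arithmetic is used to avoid rounding issues.

```python
from fractions import Fraction

def pivot_columns(rows):
    """Row-reduce a copy of `rows` to echelon form and return the pivot column indices."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots = []
    r = 0  # index of the next pivot row
    for c in range(len(m[0])):
        # look for a row at or below r with a nonzero entry in column c
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue  # no pivot in this column
        m[r], m[p] = m[p], m[r]
        # eliminate the entries below the pivot
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return pivots

# Columns are the five spanning vectors of H from the solution above.
A = [
    [1, -2, 9, 5, 4],
    [1, -1, 6, 5, -3],
    [0, 1, -3, 1, -9],
    [0, 0, 0, 5, -10],
]
pivots = pivot_columns(A)
print(pivots, len(pivots))
```

The zero-based pivot indices correspond to the 1st, 2nd, and 4th columns, which matches the highlighted pivots in the echelon form.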

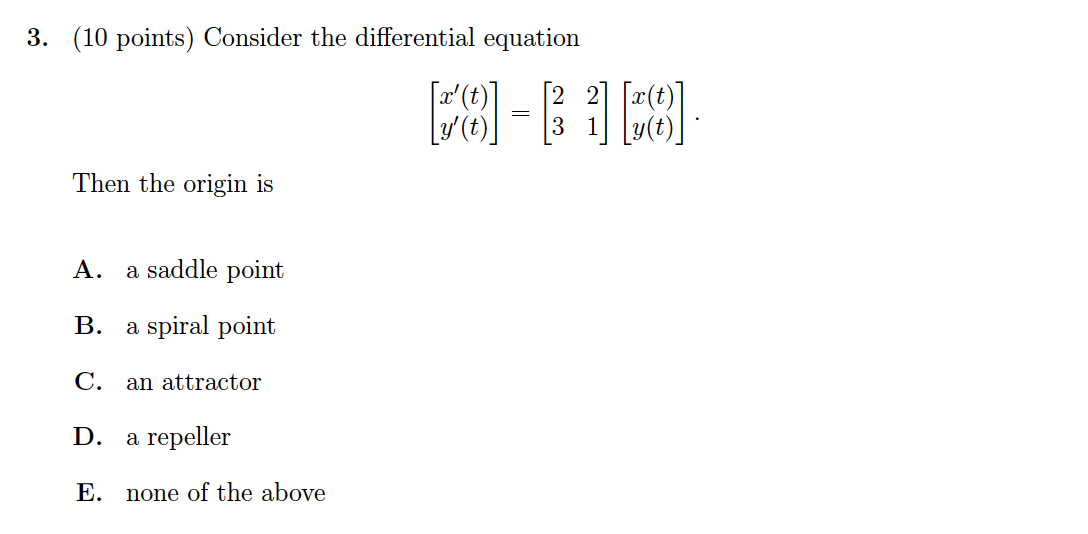

Problem 3 (10 points)

Problem 3 Solution

First, find the eigenvalues of the matrix \[ \begin{align} \det (A-\lambda I) &=\begin{vmatrix}2-\lambda &2\\3 &1-\lambda\end{vmatrix}=(\lambda^2-3\lambda+2)-6\\&=\lambda^2-3\lambda-4=(\lambda+1)(\lambda-4)=0 \end{align} \] This gives two real eigenvalues, \(-1\) and \(4\). Since they have opposite signs, the origin is a saddle point.

The answer is A.
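The same classification can be sketched in code. This is only an illustration: it assumes the problem concerns the system \(\pmb x'(t)=A\pmb x(t)\) (as the sign-based argument suggests), the function names are hypothetical, and only the case of real, nonzero eigenvalues is handled.

```python
import math

def real_eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of [[a, b], [c, d]] from lambda^2 - tr*lambda + det = 0 (real case only)."""
    tr, det = a + d, a * d - b * c
    disc = tr * tr - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues; this sketch handles the real case only")
    root = math.sqrt(disc)
    return (tr - root) / 2, (tr + root) / 2

def classify_origin(l1, l2):
    """Classify the origin of x' = Ax for real, nonzero eigenvalues."""
    if l1 * l2 < 0:
        return "saddle point"  # opposite signs
    return "repeller" if l1 > 0 else "attractor"

l1, l2 = real_eigenvalues_2x2(2, 2, 3, 1)
print(l1, l2, classify_origin(l1, l2))
```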

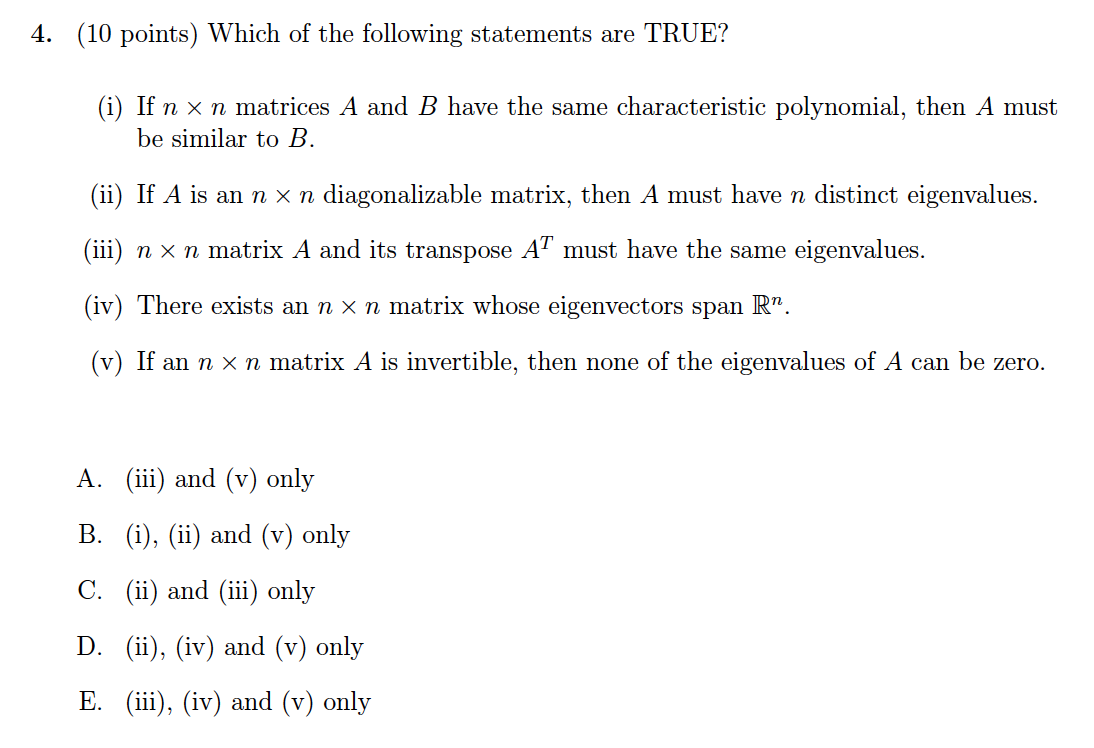

Problem 4 (10 points)

Problem 4 Solution

(i) is NOT true. Referring to Theorem 4 of Section 5.2 "The Characteristic Equation",

If \(n\times n\) matrices \(A\) and \(B\) are similar, then they have the same characteristic polynomial and hence the same eigenvalues (with the same multiplicities).

But the converse is NOT true. There are matrices that are not similar even though they have the same eigenvalues.

(ii) is NOT true either. Referring to Theorem 6 of Section 5.3 "Diagonalization",

An \(n\times n\) matrix with \(n\) distinct eigenvalues is diagonalizable.

The book mentions that the above theorem provides a sufficient condition for a matrix to be diagonalizable, not a necessary one. So the converse is NOT true. There are diagonalizable matrices that have eigenvalues with multiplicity 2 or more.

(iii) Since the identity matrix is symmetric, and \(\det A=\det A^T\) for \(n\times n\) matrix, we can write \(\det (A-\lambda I) = \det (A-\lambda I)^T = \det(A^T-\lambda I)\). So matrix \(A\) and its transpose have the same eigenvalues. This statement is TRUE.

(iv) This is definitely TRUE as we can find eigenvectors that are linearly independent and span \(\mathbb R^n\).

(v) If matrix \(A\) has a zero eigenvalue, then \(\det (A-0I)=\det A=0\), so it is not invertible. This statement is TRUE.

In summary, statements (iii), (iv), and (v) are TRUE. The answer is E.
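As a concrete instance of the counterexamples mentioned for (i) and (ii) (an illustration chosen here, not taken from the exam), compare the identity matrix with a shear matrix: \[ I=\begin{bmatrix}1 &0\\0 &1\end{bmatrix},\quad B=\begin{bmatrix}1 &1\\0 &1\end{bmatrix},\quad \det(I-\lambda I)=\det(B-\lambda I)=(1-\lambda)^2 \] Both have the eigenvalue 1 with multiplicity 2, yet they are not similar, because \(PIP^{-1}=I\neq B\) for every invertible \(P\). At the same time, \(I\) is already diagonal, so it is a diagonalizable matrix whose eigenvalues are not distinct.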

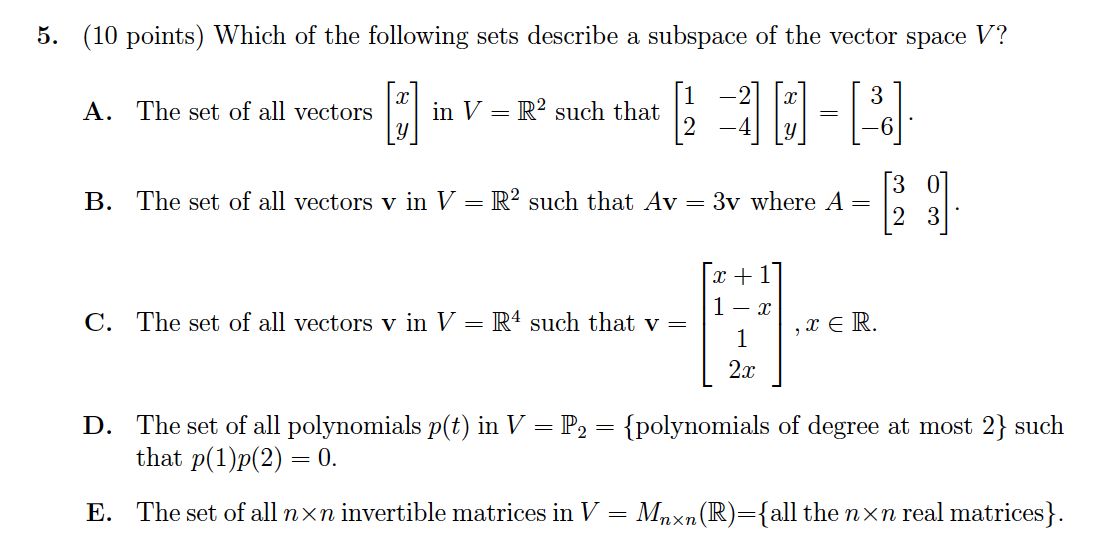

Problem 5 (10 points)

Problem 5 Solution

A This vector set does not include zero vector (\(x = y = 0\)). So it is not a subspace of \(V\).

B For eigenvalue 3, we can find the eigenvectors from \(\begin{bmatrix}0 &0\\2 &0\end{bmatrix}\pmb v=\pmb 0\), which gives \(\begin{bmatrix}0\\\ast\end{bmatrix}\). All vectors in this set satisfy the three subspace properties. So this one is good.

C This cannot be the right choice. Since the 3rd entry is always 1, the vector set cannot be closed under vector addition and multiplication by scalars. Also, it does not include zero vector either.

D For \(p(x)=a_0+a_1x+a_2x^2\) and \(p(1)p(2)=0\), this gives \[(a_0+a_1+a_2)(a_0+2a_1+4a_2)=0\] To verify whether this set is closed under vector addition, define \(q(x)=b_0+b_1x+b_2x^2\) with \(q(1)q(2)=0\), which gives \[(b_0+b_1+b_2)(b_0+2b_1+4b_2)=0\] Now let \(r(x)=p(x)+q(x)=c_0+c_1x+c_2x^2\), where \(c_i=a_i+b_i\) for \(i=0,1,2\). Is it true that \[(c_0+c_1+c_2)(c_0+2c_1+4c_2)=0\] No, it is not necessarily the case. This one is not the right choice either.

E Invertible matrix indicates that its determinant is not 0. The all-zero matrix is certainly not invertible, so it is not in the specified set. Moreover, two invertible matrices can add to a non-invertible matrix, such as the following example \[ \begin{bmatrix}2 &1\\1 &2\end{bmatrix}+\begin{bmatrix}-2 &1\\-1 &-2\end{bmatrix}=\begin{bmatrix}0 &2\\0 &0\end{bmatrix} \] This set is NOT a subspace of \(V\).

The answer is B.
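To make the argument for choice D concrete, here is one possible counterexample (the polynomials are picked for illustration only). Take \(p(x)=x-1\) and \(q(x)=x-2\), so that \(r(x)=p(x)+q(x)=2x-3\): \[ p(1)p(2)=0\cdot 1=0,\qquad q(1)q(2)=(-1)\cdot 0=0,\qquad r(1)r(2)=(-1)\cdot 1=-1\neq 0 \] Both \(p\) and \(q\) belong to the set, but their sum does not, so the set is not closed under vector addition.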

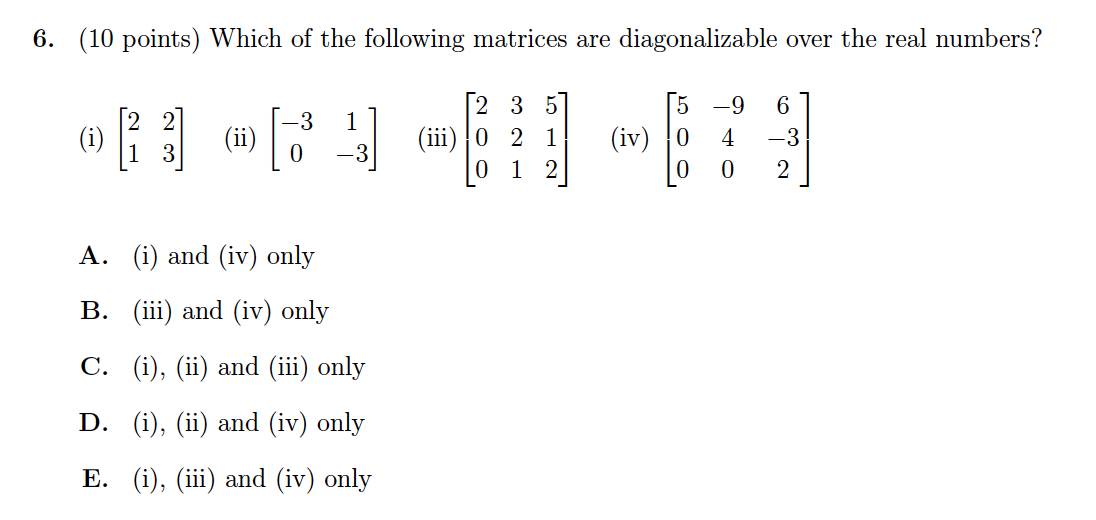

Problem 6 (10 points)

Problem 6 Solution

Recall from the Problem 4 solution that a matrix with \(n\) distinct eigenvalues is diagonalizable.

(i) The following calculation shows this matrix has two eigenvalues 4 and 1. So it is diagonalizable. \[\begin{vmatrix}2-\lambda &2\\1 &3-\lambda\end{vmatrix}=(\lambda^2-5\lambda+6)-2=(\lambda-1)(\lambda-4)=0\]

(ii) It is easy to see that there is one eigenvalue \(-3\) with multiplicity 2. However, we can only get one linearly independent eigenvector \(\begin{bmatrix}1\\0\end{bmatrix}\) for this eigenvalue. So it is NOT diagonalizable.

(iii) To find out the eigenvalues for this \(3\times 3\) matrix, do the calculation as below \[ \begin{vmatrix}2-\lambda &3 &5\\0 &2-\lambda &1\\0 &1 &2-\lambda\end{vmatrix}=(2-\lambda)\begin{vmatrix}2-\lambda &1\\1 &2-\lambda\end{vmatrix}=(2-\lambda)(\lambda-3)(\lambda-1) \] So we get 3 eigenvalues 2, 3, and 1. This matrix is diagonalizable.

(iv) This is an upper triangular matrix, so the diagonal entries (5, 4, 2) are all eigenvalues. As this matrix has three distinct eigenvalues, it is diagonalizable.

Since only (ii) is not diagonalizable, the answer is E.
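The eigenvalues found for (i) and (iii) can be confirmed by evaluating \(\det(A-\lambda I)\) directly. The sketch below uses only the standard library; the helper names are hypothetical, and scanning integer candidates in \([-10, 10]\) is a shortcut that works here only because the eigenvalues happen to be small integers.

```python
def det(m):
    """Determinant by cofactor expansion along the first row (fine for tiny matrices)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j, entry in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * entry * det(minor)
    return total

def char_poly_at(m, lam):
    """Evaluate det(M - lam*I)."""
    n = len(m)
    shifted = [[m[i][j] - (lam if i == j else 0) for j in range(n)] for i in range(n)]
    return det(shifted)

A1 = [[2, 2], [1, 3]]                    # matrix (i)
A3 = [[2, 3, 5], [0, 2, 1], [0, 1, 2]]   # matrix (iii)

# Integer roots of the characteristic polynomial in a small search window.
roots_i = [lam for lam in range(-10, 11) if char_poly_at(A1, lam) == 0]
roots_iii = [lam for lam in range(-10, 11) if char_poly_at(A3, lam) == 0]
print(roots_i, roots_iii)
```

Each matrix has as many distinct roots as its size, which is the sufficient condition for diagonalizability used above.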

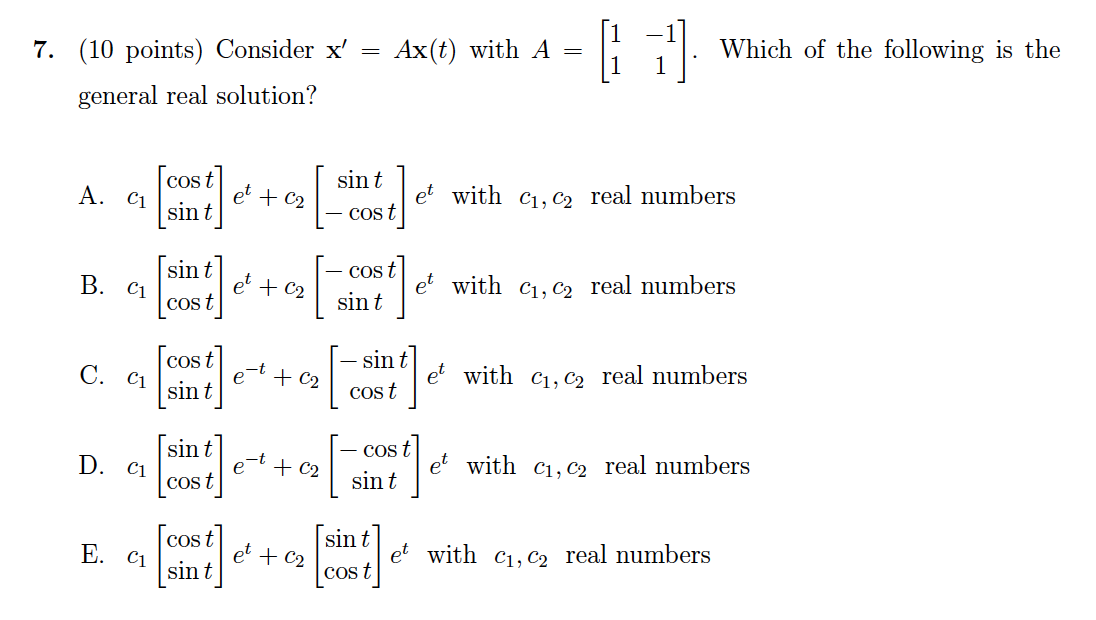

Problem 7 (10 points)

Problem 7 Solution

This problem involves complex eigenvalues.

Step 1: Find the eigenvalue of the given matrix \[ \begin{vmatrix}1-\lambda &-1\\1 &1-\lambda\end{vmatrix}=\lambda^2-2\lambda+2=0 \] Solve this with the quadratic formula \[ \lambda=\frac {-b\pm {\sqrt {b^{2}-4ac}}}{2a}=\frac {-(-2)\pm {\sqrt {(-2)^2-4\times 1\times 2}}}{2\times 1}=1\pm i \]

Step 2: Find the corresponding eigenvector for \(\lambda=1+i\) \[ \begin{bmatrix}-i &-1\\1 &-i\end{bmatrix}\sim\begin{bmatrix}0 &0\\1 &-i\end{bmatrix} \] This gives \(x_1=ix_2\), so the eigenvector can be \(\begin{bmatrix}i\\1\end{bmatrix}\).

Step 3: Generate the real solution

From Section 5.7 "Applications to Differential Equations", we learn that the general solution to a matrix differential equation is \[\pmb x(t)=c_1\pmb{v}_1 e^{\lambda_1 t}+c_2\pmb{v}_2 e^{\lambda_2 t}\] For a real matrix, complex eigenvalues and associated eigenvectors come in conjugate pairs. The real and imaginary parts of \(\pmb{v}_1 e^{\lambda_1 t}\) are (real) solutions of \(\pmb x'(t)=A\pmb x(t)\), because they are linear combinations of \(\pmb{v}_1 e^{\lambda_1 t}\) and \(\pmb{v}_2 e^{\lambda_2 t}\). (See the proof in "Complex Eigenvalues" of Section 5.7)

Now, using Euler's formula (\(e^{ix}=\cos x+i\sin x\)), we have \[\pmb{v}_1 e^{\lambda_1 t}=e^t(\cos t+i\sin t)\begin{bmatrix}i\\1\end{bmatrix} =e^t\begin{bmatrix}-\sin t+i\cos t\\\cos t+i\sin t\end{bmatrix}\] The general REAL solution is a linear combination of the REAL and IMAGINARY parts of the result above: \[c_1 e^t\begin{bmatrix}-\sin t\\\cos t\end{bmatrix}+ c_2 e^t\begin{bmatrix}\cos t\\\sin t\end{bmatrix}\]

At first glance, none on the list matches our answer above. However, let's inspect this carefully. We can exclude C and D first since they both have \(e^{-t}\) that is not in our answer. Next, it is impossible to be E because it has no minus sign.

Now between A and B, which one is most likely to be the right one? We see that B has \(-\cos t\) on top of \(\sin t\). That could not match our answer no matter what \(c_2\) is. If we switch \(c_1\) and \(c_2\) of A and flip the sign of the 2nd vector, A would become the same as our answer. Since \(c_1\) and \(c_2\) are just arbitrary scalars, this deduction is reasonable.

So the answer is A.
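As a sanity check, the two real solutions derived above can be tested numerically against \(\pmb x'(t)=A\pmb x(t)\). This is a rough sketch, not a proof: the derivative is approximated with a central difference, and the step size `h` and the sample times are arbitrary choices.

```python
import math

A = [[1, -1], [1, 1]]

def x1(t):
    """Real part of v1 * exp(lambda1 * t)."""
    return [-math.exp(t) * math.sin(t), math.exp(t) * math.cos(t)]

def x2(t):
    """Imaginary part of v1 * exp(lambda1 * t)."""
    return [math.exp(t) * math.cos(t), math.exp(t) * math.sin(t)]

def residual(x, t, h=1e-6):
    """Largest entry of |x'(t) - A x(t)|, with x'(t) from a central difference."""
    xp, xm, x0 = x(t + h), x(t - h), x(t)
    deriv = [(p - m) / (2 * h) for p, m in zip(xp, xm)]
    ax = [sum(a * v for a, v in zip(row, x0)) for row in A]
    return max(abs(d - e) for d, e in zip(deriv, ax))

# Worst mismatch over both solutions and a few sample times.
worst = max(residual(x, t) for x in (x1, x2) for t in (0.0, 0.5, 1.0, 2.0))
print(worst)
```

A residual close to zero (up to finite-difference error) at every sample time is consistent with both vector functions solving the system.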

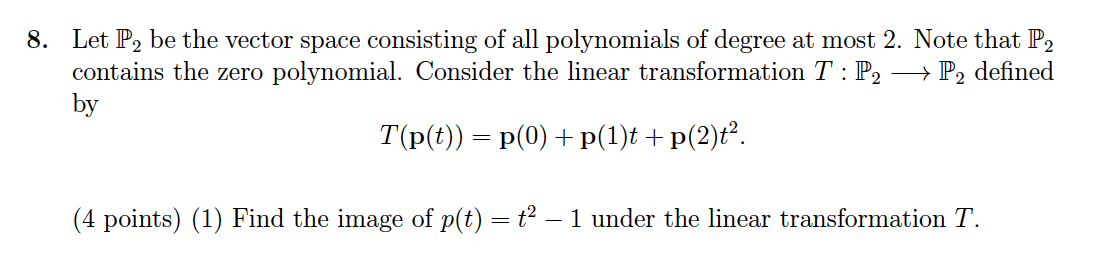

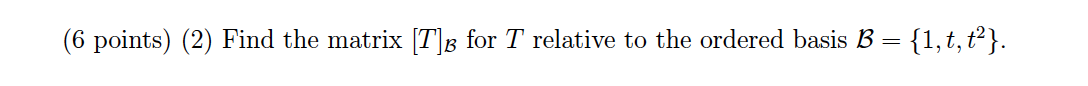

Problem 8 (10 points)

Problem 8 Solution

(1) Directly apply \(p(t)=t^2-1\) to the mapping function \[T(t^2-1)=0^2-1+(1^2-1)t+(2^2-1)t^2=-1+3t^2\]

(2) Denote \(p(t)=a_0+a_1t+a_2t^2\), \(T(p(t))=b_0+b_1t+b_2t^2\), then \[ T(a_0+a_1t+a_2t^2)=a_0+(a_0+a_1+a_2)t+(a_0+2a_1+4a_2)t^2 \] So \[ \begin{align} a_0 &&=b_0\\ a_0 &+ a_1 + a_2 &=b_1\\ a_0 &+ 2a_1 + 4a_2 &=b_2 \end{align} \] This gives the \([T]_B=\begin{bmatrix}1 &0 &0\\1 &1 &1\\1 &2 &4\end{bmatrix}\).

Alternatively, we can form the same matrix from the images of the basis vectors: \[\begin{align} T(1)&=1+t+t^2 \Rightarrow \begin{bmatrix}1\\1\\1\end{bmatrix}\\ T(t)&=0+t+2t^2 \Rightarrow \begin{bmatrix}0\\1\\2\end{bmatrix}\\ T(t^2)&=0+t+4t^2 \Rightarrow \begin{bmatrix}0\\1\\4\end{bmatrix} \end{align}\]
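The column-by-column construction can also be sketched in code. The definition \(T(p)=p(0)+p(1)t+p(2)t^2\) used below is inferred from the computation in part (1), and polynomials are represented by their coefficient lists `[a0, a1, a2]`, which is a choice made for this illustration.

```python
def T(coeffs):
    """Apply T(p) = p(0) + p(1) t + p(2) t^2 to p given as [a0, a1, a2]."""
    def p(x):
        return sum(a * x ** k for k, a in enumerate(coeffs))
    return [p(0), p(1), p(2)]

basis = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]      # coordinates of 1, t, t^2
columns = [T(b) for b in basis]                # images of the basis vectors
matrix = [list(row) for row in zip(*columns)]  # place the images as columns

print(matrix)
print(T([-1, 0, 1]))  # image of t^2 - 1 from part (1)
```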

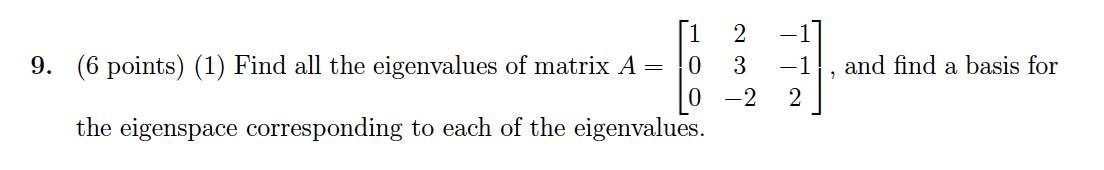

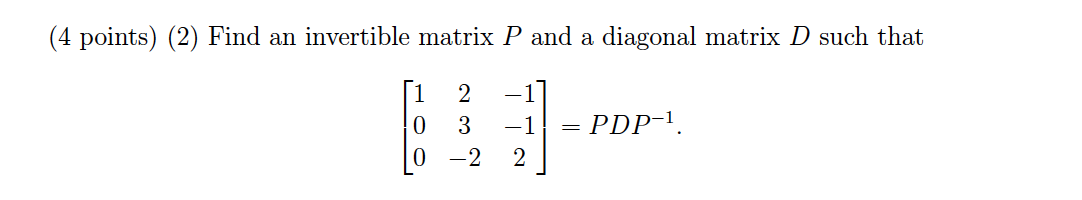

Problem 9 (10 points)

Problem 9 Solution

(1) Find the eigenvalues with \(\det (A-\lambda I)=0\) \[ \begin{vmatrix}1-\lambda &2 &-1\\0 &3-\lambda &-1\\0 &-2 &2-\lambda\end{vmatrix}=(1-\lambda)\begin{vmatrix}3-\lambda &-1\\-2 &2-\lambda\end{vmatrix}=(1-\lambda)(\lambda-4)(\lambda-1) \] So there are \(\lambda_1=\lambda_2=1\), and \(\lambda_3=4\).

Next is to find the eigenvectors for each eigenvalue

For \(\lambda_1=\lambda_2=1\), apply row reduction to the augmented matrix of the system \((A-\lambda I)\pmb x=\pmb 0\) \[ \begin{bmatrix}0 &2 &-1 &0\\0 &2 &-1 &0\\0 &-2 &1 &0\end{bmatrix}\sim \begin{bmatrix}0 &2 &-1 &0\\0 &0 &0 &0\\0 &0 &0 &0\end{bmatrix} \] With two free variables \(x_1\) and \(x_2\), we get \(x_3=2x_2\). So the parametric vector form can be written as \[ \begin{bmatrix}x_1\\x_2\\x_3\end{bmatrix}= x_1\begin{bmatrix}1\\0\\0\end{bmatrix}+x_2\begin{bmatrix}0\\1\\2\end{bmatrix} \] So the eigenvectors are \(\begin{bmatrix}1\\0\\0\end{bmatrix}\) and \(\begin{bmatrix}0\\1\\2\end{bmatrix}\).

For \(\lambda_3=4\), follow the same process \[ \begin{bmatrix}-3 &2 &-1 &0\\0 &-1 &-1 &0\\0 &-2 &-2 &0\end{bmatrix}\sim \begin{bmatrix}3 &-2 &1 &0\\0 &1 &1 &0\\0 &0 &0 &0\end{bmatrix} \] With one free variable \(x_3\), we get \(x_1=x_2=-x_3\). So the eigenvector can be written as \(\begin{bmatrix}1\\1\\-1\end{bmatrix}\) (or \(\begin{bmatrix}-1\\-1\\1\end{bmatrix}\)).

(2) We can directly construct \(P\) from the vectors in last step, and construct \(D\) from the corresponding eigenvalues. Here are the answers: \[ P=\begin{bmatrix}\color{fuchsia}1 &\color{fuchsia}0 &\color{blue}1\\\color{fuchsia}0 &\color{fuchsia}1 &\color{blue}1\\\color{fuchsia}0 &\color{fuchsia}2 &\color{blue}{-1}\end{bmatrix},\; D=\begin{bmatrix}\color{fuchsia}1 &0 &0\\0 &\color{fuchsia}1 &0\\0 &0 &\color{blue}4\end{bmatrix} \]
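A quick way to validate a diagonalization without computing \(P^{-1}\) is to check that \(AP=PD\) and that \(P\) is invertible. The sketch below does this with plain integer arithmetic; \(A\) is read off from the determinant in part (1), and `matmul` is a small helper written for this illustration.

```python
def matmul(x, y):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]

A = [[1, 2, -1], [0, 3, -1], [0, -2, 2]]
P = [[1, 0, 1], [0, 1, 1], [0, 2, -1]]
D = [[1, 0, 0], [0, 1, 0], [0, 0, 4]]

AP = matmul(A, P)
PD = matmul(P, D)
# det P by expansion down the first column, whose only nonzero entry is P[0][0]
det_P = P[0][0] * (P[1][1] * P[2][2] - P[1][2] * P[2][1])
print(AP == PD, det_P)
```

Since \(\det P\neq 0\), \(AP=PD\) is equivalent to \(A=PDP^{-1}\).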

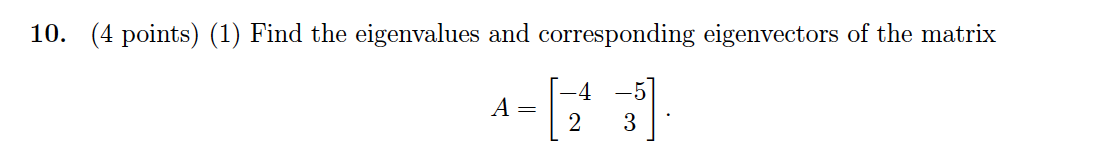

Problem 10 (10 points)

Problem 10 Solution

(1) Find the eigenvalues with \(\det (A-\lambda I)=0\)

\[\begin{vmatrix}-4-\lambda &-5\\2 &3-\lambda\end{vmatrix}=(\lambda^2+\lambda-12)+10=(\lambda+2)(\lambda-1)=0\] So there are two eigenvalues, \(-2\) and 1. Next is to find the eigenvectors for each eigenvalue.

For \(\lambda=-2\), the matrix \(A-\lambda I\) becomes \[\begin{bmatrix}-2 &-5\\2 &5\end{bmatrix}\sim\begin{bmatrix}0 &0\\2 &5\end{bmatrix}\] This yields the eigenvector \(\begin{bmatrix}5\\-2\end{bmatrix}\).

For \(\lambda=1\), the matrix \(A-\lambda I\) becomes \[\begin{bmatrix}-5 &-5\\2 &2\end{bmatrix}\sim\begin{bmatrix}0 &0\\1 &1\end{bmatrix}\] This yields the eigenvector \(\begin{bmatrix}1\\-1\end{bmatrix}\).

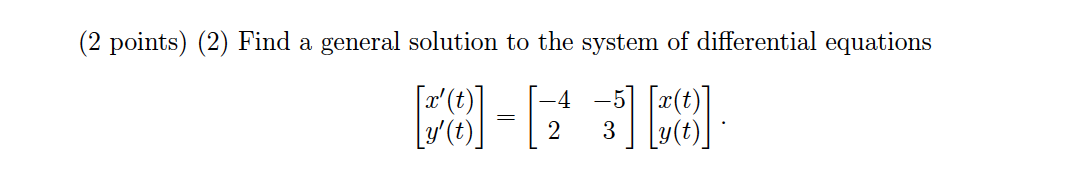

(2) The general solution to a matrix differential equation is \[\pmb x(t)=c_1\pmb{v}_1 e^{\lambda_1 t}+c_2\pmb{v}_2 e^{\lambda_2 t}\] Since we have already found the eigenvalues and the corresponding eigenvectors, we can write down \[ \begin{bmatrix}x(t)\\y(t)\end{bmatrix}=c_1\begin{bmatrix}5\\-2\end{bmatrix}e^{-2t}+c_2\begin{bmatrix}1\\-1\end{bmatrix}e^t \]

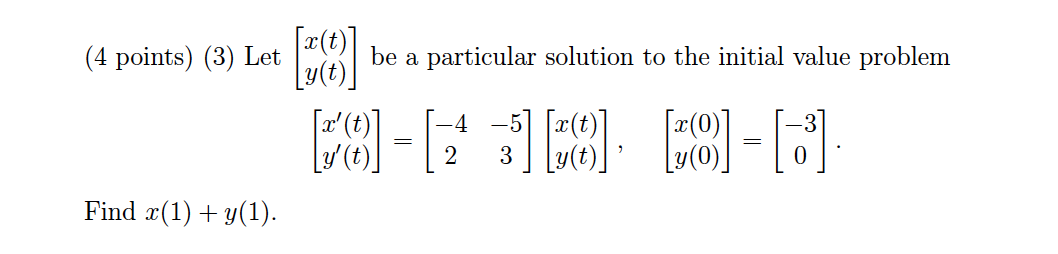

(3) Applying the initial values of \(x(0)\) and \(y(0)\) gives the following equations: \[\begin{align} 5c_1+c_2&=-3\\ -2c_1-c_2&=0 \end{align}\] This gives \(c_1=-1\) and \(c_2=2\). So \(x(1)+y(1)=-5e^{-2}+2e^{1}+2e^{-2}-2e^{1}=-3e^{-2}\).
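The arithmetic in part (3) can be reproduced with a short script. This is a sketch under stated assumptions: the initial values \(x(0)=-3\) and \(y(0)=0\) are read off from the right-hand sides of the linear system above, and the constants are solved with Cramer's rule.

```python
import math

v1, lam1 = (5, -2), -2   # eigenpair for lambda = -2
v2, lam2 = (1, -1), 1    # eigenpair for lambda = 1
x0, y0 = -3, 0           # initial values implied by the linear system above

# Solve c1*v1 + c2*v2 = (x0, y0) with Cramer's rule.
det = v1[0] * v2[1] - v2[0] * v1[1]
c1 = (x0 * v2[1] - v2[0] * y0) / det
c2 = (v1[0] * y0 - x0 * v1[1]) / det

def solution(t):
    """Return (x(t), y(t)) for the particular solution."""
    e1, e2 = math.exp(lam1 * t), math.exp(lam2 * t)
    return (c1 * v1[0] * e1 + c2 * v2[0] * e2,
            c1 * v1[1] * e1 + c2 * v2[1] * e2)

x_at_1, y_at_1 = solution(1)
print(c1, c2, x_at_1 + y_at_1, -3 * math.exp(-2))
```

The two \(e^{1}\) terms cancel in the sum, which is why only the \(e^{-2}\) term survives.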

Summary

Here are the key knowledge points covered by this exam:

- Linear dependency, Rank, and dimension of null space

- Vector Space, Subspace Properties, and Basis

- Eigenvalues, eigenvectors, and classification of the origin (e.g., saddle point)

- Similar matrices and diagonalization

- Applications to Differential Equations